Why Software Developers Need Prompt Engineering Skills

Part 1 of the Generative AI series

The Context

Confession: I am a software engineer who has built very complex enterprise integrations at work but has never really worked on an AI/ML project.

Previously, I hadn't pursued ML model building or training, as I didn't consider it necessary for my role.

However, my current focus is on a Generative AI use case.

This entails leveraging AI services from a major cloud provider and using enterprise data to train the AI models.

Using the available documentation, I was able to build a prototype in a week's time. All I had to do was upload training data through the UI, train an existing model, and leverage the APIs to build the prototype. It was easy.

But there is one area where I have been putting in a lot of work: getting the prompts right.

The prompts we are used to giving ChatGPT and the prompts you need to solve business use cases are very different.

Unlike a ChatGPT conversation, where you can keep refining your request, the generative AI in your business solution needs to return a high-quality response from a single prompt.

That's why developers need to learn "Prompt Engineering" with a developer's mindset.

Generative AI is only as good as the prompt it receives. Otherwise, it's GIGO: garbage in, garbage out.

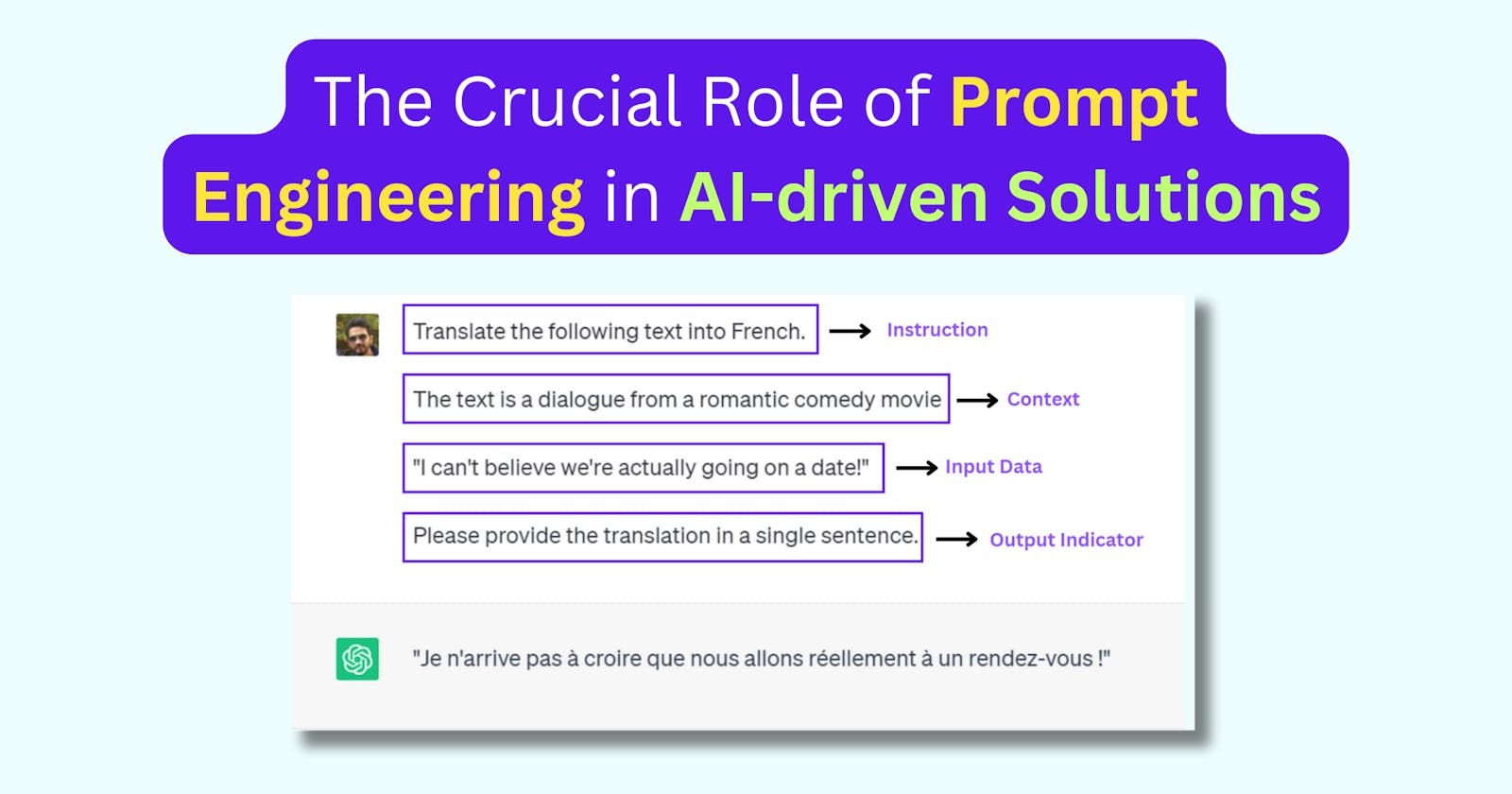

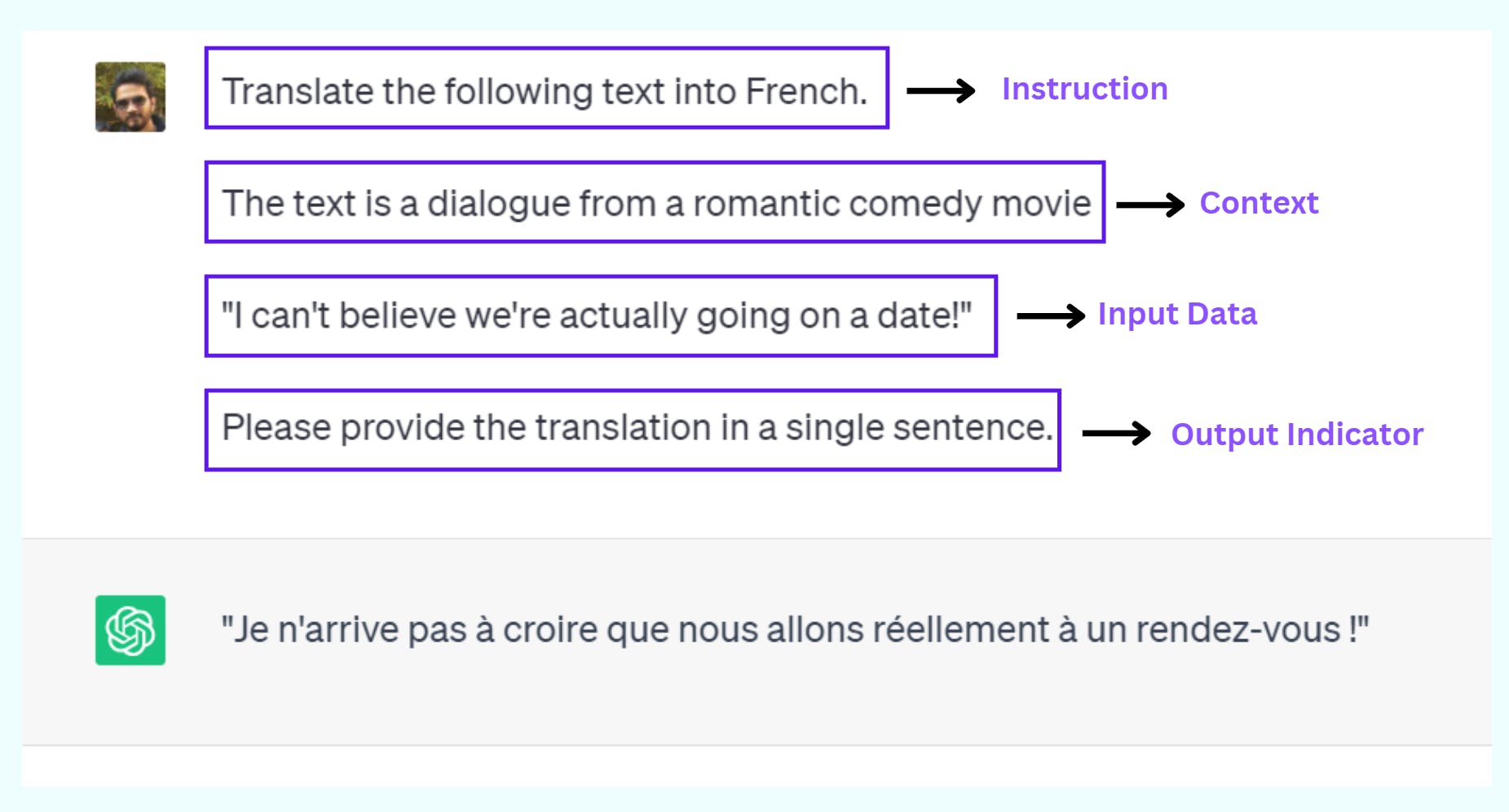

The Elements of a Good Prompt

📜 Instruction: This is what you tell the model to do. It could be something like "translate this text into French" or "come up with ideas for a science fiction story." The instruction sets the task for the model.

🔎 Context: This provides extra information to help the model understand the task. For example, if it's a translation task, you might mention that the text is a dialogue from a movie or a passage from a scientific paper. The context helps the model understand the style, tone, and specific details needed.

📥 Input data: This is the actual data the model works with. In a translation task, it's the text you want to translate. In a question-answering task, it's the question you're asking.

📤 Output indicator: This tells the model how to format its response. You can specify if you want a list, a paragraph, a single sentence, or any other structure. This helps the model generate a response that is more focused and useful.
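The four elements map naturally onto a small helper that assembles a prompt string. This is a minimal sketch: the function name `build_prompt` and the labelled-section layout are my own assumptions, not a format that any particular model requires.

```python
def build_prompt(instruction: str, context: str, input_data: str, output_indicator: str) -> str:
    """Assemble a prompt from the four elements, one labelled section each."""
    sections = [
        f"Instruction: {instruction}",
        f"Context: {context}",
        f"Input: {input_data}",
        f"Output format: {output_indicator}",
    ]
    # Blank lines between sections keep the parts visually distinct for the model.
    return "\n\n".join(sections)


prompt = build_prompt(
    instruction="Translate this text into French.",
    context="The text is a line of dialogue from a movie, spoken casually between friends.",
    input_data="See you tomorrow at the café!",
    output_indicator="Return a single sentence with no explanation.",
)
print(prompt)
```

Keeping each element in its own named argument makes it harder to forget one, and easier to vary a single element (say, the context) while testing.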

Anatomy of an Engineered Prompt

BASIC ELEMENTS:

<Initiation> <Verbs> <Data-Input>

SPECIFIERS:

<Output Format> <Output Tone> <Target Audience> <Temporal Specifier> <Scope> <Constraints>

BASIC ELEMENTS:

🔍 <Initiation>: The starting point of the prompt, which sets the stage, tone, and context for the task at hand. For example, you might tell the model to act as a member of a certain profession.

⚡ <Verbs>: Action words that define what needs to be done or the type of response required.

📊 <Data-Input>: Information or data used as input to generate the desired response. 📚

SPECIFIERS:

📝 <Output Format>: The expected structure or format of the response, such as a paragraph, list, or table.

🎨 <Output Tone>: The desired tone or style of the response, be it formal, informal, technical, or persuasive.

🎯 <Target Audience>: The intended audience for the response, whether general readers, experts, students, or a specific group.

⏰ <Temporal Specifier>: Time-related context or requirement for the response, involving specific time periods, events, or scenarios.

🌐 <Scope>: The boundaries or extent of the response, which can be narrow or broad in terms of focus and coverage.

⛓️ <Constraints>: Additional limitations or restrictions on the response, such as word limits or specific resources to be utilized.
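One way to treat this anatomy as a reusable template is a dataclass in which the basic elements are required and every specifier is optional. This is a sketch under my own assumptions: the class name `EngineeredPrompt`, the field labels, and the sample release notes are hypothetical and only illustrate the structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EngineeredPrompt:
    # Basic elements (required)
    initiation: str   # e.g. a role for the model to adopt
    verb: str         # the action to perform
    data_input: str   # the material to work on
    # Specifiers (optional)
    output_format: Optional[str] = None
    output_tone: Optional[str] = None
    target_audience: Optional[str] = None
    temporal_specifier: Optional[str] = None
    scope: Optional[str] = None
    constraints: Optional[str] = None

    def render(self) -> str:
        lines = [self.initiation, f"{self.verb}:", self.data_input]
        specifiers = {
            "Output format": self.output_format,
            "Tone": self.output_tone,
            "Audience": self.target_audience,
            "Time frame": self.temporal_specifier,
            "Scope": self.scope,
            "Constraints": self.constraints,
        }
        # Only include the specifiers that were actually set.
        for label, value in specifiers.items():
            if value:
                lines.append(f"{label}: {value}")
        return "\n".join(lines)


release_prompt = EngineeredPrompt(
    initiation="You are a senior technical writer.",
    verb="Summarize the following release notes",
    data_input="v2.3 adds SSO login, fixes the CSV export bug, and drops support for Python 3.7.",
    output_format="a bulleted list",
    target_audience="non-technical customers",
    constraints="no more than 50 words",
)
print(release_prompt.render())
```

Because unset specifiers are skipped, the same template covers both a bare prompt and a heavily constrained one.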

Different Types of Prompting

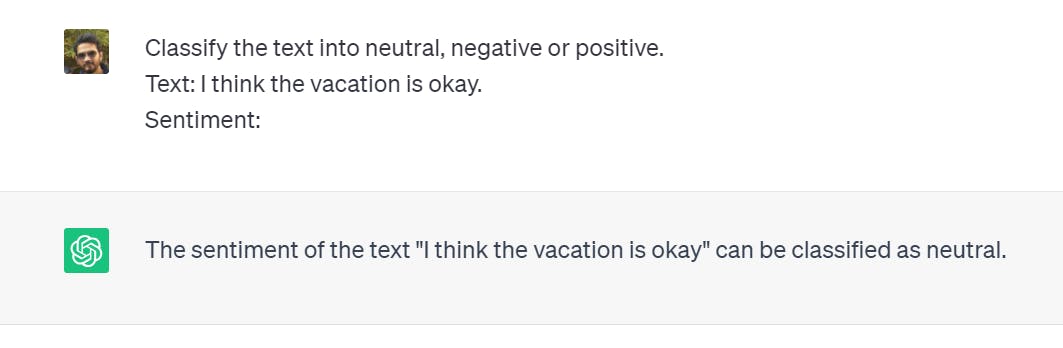

Zero-shot prompting

A technique where a language model is asked to generate a response or perform a task without being given any examples of that task in the prompt. It relies on the model's ability to generalize from what it learned during pre-training and to follow the prompt's instructions.

For example, the model can be instructed to classify the sentiment of a text as neutral, negative, or positive without being shown any examples of texts paired with their sentiment labels.
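A zero-shot prompt for this task contains only the instruction, the input, and a cue for where the answer should go. This is a sketch; the exact wording and the sample sentence are illustrative, and the function name is hypothetical.

```python
def zero_shot_sentiment_prompt(text: str) -> str:
    # No labelled examples: just the instruction, the input, and an output cue.
    return (
        "Classify the text into neutral, negative, or positive.\n"
        f"Text: {text}\n"
        "Sentiment:"
    )


print(zero_shot_sentiment_prompt("I think the vacation is okay."))
```

Ending on `Sentiment:` nudges the model to reply with just the label rather than a full sentence.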

Few-shot prompting

Few-shot prompting involves including a small number of examples (demonstrations) of the task directly in the prompt. The model is not retrained; it uses these examples in context to infer the expected pattern, which often makes its responses more accurate than with zero-shot prompting.
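Turning the sentiment example into a few-shot prompt means placing a handful of labelled demonstrations before the real input. This is a minimal sketch; the helper name and the three example texts are made up for illustration.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Build a prompt with a few labelled examples before the real input."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each demonstration pairs an input with the output we expect.
        lines += [f"Text: {text}", f"Sentiment: {label}", ""]
    # The real input follows the same pattern, leaving the label for the model.
    lines += [f"Text: {query}", "Sentiment:"]
    return "\n".join(lines)


examples = [
    ("The checkout page keeps timing out.", "negative"),
    ("The new dashboard is fantastic!", "positive"),
    ("The meeting moved to 3 pm.", "neutral"),
]
prompt = few_shot_prompt(
    "Classify the text into neutral, negative, or positive.",
    examples,
    "Support resolved my ticket in minutes.",
)
print(prompt)
```

Covering every label at least once in the demonstrations helps the model see the full set of allowed answers.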

Chain-of-Thought Prompting

It involves prompting the language model to work through intermediate reasoning steps before it gives a final answer. Typically, the examples in the prompt show their reasoning written out step by step, which encourages the model to produce a coherent, logical chain of thought of its own instead of jumping straight to an answer.
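The difference from plain few-shot prompting lies in the examples: with chain-of-thought, each answer spells out its reasoning before the result. The sketch below formats an example either way, using the trees question that appears in the self-consistency example below. The helper names and the "The answer is N." convention are assumptions that follow that example.

```python
from typing import List, NamedTuple


class Exemplar(NamedTuple):
    question: str
    reasoning: str
    answer: str


def format_exemplar(ex: Exemplar, chain_of_thought: bool) -> str:
    if chain_of_thought:
        # Show the intermediate steps, then the final answer.
        return f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
    # Standard few-shot: the final answer only.
    return f"Q: {ex.question}\nA: The answer is {ex.answer}."


def build_cot_prompt(exemplars: List[Exemplar], new_question: str, chain_of_thought: bool = True) -> str:
    blocks = [format_exemplar(ex, chain_of_thought) for ex in exemplars]
    # Leave the final answer open for the model to complete.
    blocks.append(f"Q: {new_question}\nA:")
    return "\n\n".join(blocks)


trees = Exemplar(
    question="There are 15 trees in the grove. Grove workers will plant trees in the grove today. "
             "After they are done, there will be 21 trees. How many trees did the grove workers plant today?",
    reasoning="We start with 15 trees. Later we have 21 trees. The difference must be the number of "
              "trees they planted. So, they must have planted 21 - 15 = 6 trees.",
    answer="6",
)
print(build_cot_prompt([trees], "If there are 3 cars in the parking lot and 2 more cars arrive, "
                                "how many cars are in the parking lot?"))
```

Passing `chain_of_thought=False` produces the equivalent plain few-shot prompt, which is a handy baseline when you want to check whether the reasoning steps actually improve your results.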

Self-Consistency Prompting

It involves sampling several chain-of-thought responses for the same prompt (for example, with a non-zero temperature) and then choosing the final answer that the most responses agree on, in effect a majority vote. The aim is to let the model explore different reasoning paths and to keep the answer that is most consistent across them.

Q: There are 15 trees in the grove. Grove workers will plant trees in the grove today. After they are done, there will be 21 trees. How many trees did the grove workers plant today?

A: We start with 15 trees. Later we have 21 trees. The difference must be the number of trees they planted. So, they must have planted 21 - 15 = 6 trees. The answer is 6.

Q: If there are 3 cars in the parking lot and 2 more cars arrive, how many cars are in the parking lot?

A: There are 3 cars in the parking lot already. 2 more arrive. Now there are 3 + 2 = 5 cars. The answer is 5.

Q: Leah had 32 chocolates and her sister had 42. If they ate 35, how many pieces do they have left in total?

A: Leah had 32 chocolates and Leah’s sister had 42. That means there were originally 32 + 42 = 74 chocolates. 35 have been eaten. So in total they still have 74 - 35 = 39 chocolates. The answer is 39.

In the prompt above, we provide multiple examples of similar problems, each showing how we would reason our way to the correct answer.
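The aggregation step of self-consistency can be sketched as follows: extract the final answer from each sampled completion and keep the one that occurs most often. The completions here are made up for illustration; in a real system they would come from calling your model several times with the prompt above. The regular expression assumes the completions follow the "The answer is N." convention from the examples.

```python
import re
from collections import Counter
from typing import List, Optional

ANSWER_PATTERN = re.compile(r"The answer is\s+(-?\d+)")


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final numeric answer out of one chain-of-thought completion."""
    match = ANSWER_PATTERN.search(completion)
    return match.group(1) if match else None


def self_consistent_answer(completions: List[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled reasoning paths."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical completions sampled for one question at a non-zero temperature.
samples = [
    "Leah had 32 and her sister had 42, so 32 + 42 = 74. They ate 35, so 74 - 35 = 39. The answer is 39.",
    "They started with 32 + 42 = 74 chocolates. 74 - 35 = 39. The answer is 39.",
    "Leah had 32 chocolates. 32 - 35 = -3. The answer is -3.",
]
print(self_consistent_answer(samples))  # 39
```

The one faulty reasoning path is outvoted by the two that agree, which is the whole point of sampling more than once.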

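Generated Knowledge Prompting

It involves having the language model first generate relevant factual knowledge about the input, and then supplying that knowledge in the prompt when the model answers the question. This helps the model produce more accurate responses. The prompt below shows the knowledge-generation step: a few Input/Knowledge examples, followed by a new input for which the model is asked to write the knowledge.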

Input: Greece is larger than Mexico.

Knowledge: Greece is approximately 131,957 sq km, while Mexico is approximately 1,964,375 sq km, making Mexico 1,389% larger than Greece.

Input: Glasses always fog up.

Knowledge: Condensation occurs on eyeglass lenses when water vapor from your sweat, breath, and ambient humidity lands on a cold surface, cools, and then changes into tiny drops of liquid, forming a film that you see as fog. Your lenses will be relatively cool compared to your breath, especially when the outside air is cold.

Input: A fish is capable of thinking.

Knowledge: Fish are more intelligent than they appear. In many areas, such as memory, their cognitive powers match or exceed those of ’higher’ vertebrates including non-human primates. Fish’s long-term memories help them keep track of complex social relationships.

Input: A common effect of smoking lots of cigarettes in one’s lifetime is a higher than normal chance of getting lung cancer.

Knowledge: Those who consistently averaged less than one cigarette per day over their lifetime had nine times the risk of dying from lung cancer than never smokers. Among people who smoked between one and 10 cigarettes per day, the risk of dying from lung cancer was nearly 12 times higher than that of never smokers.

Input: A rock is the same size as a pebble.

Knowledge: A pebble is a clast of rock with a particle size of 4 to 64 millimetres based on the Udden-Wentworth scale of sedimentology. Pebbles are generally considered larger than granules (2 to 4 millimetres diameter) and smaller than cobbles (64 to 256 millimetres diameter).

Input: Part of golf is trying to get a higher point total than others.

Knowledge:
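The prompt above covers only the knowledge-generation step. In the published technique, usually called generated knowledge prompting, a second call feeds that knowledge back to the model together with the question. Here is a two-stage sketch; `Generate` stands in for whatever function calls your model, `fake_generate` is an offline stub so the code runs, and the stage-2 wording ("Yes or no?", "Explain and Answer:") is an assumption.

```python
from typing import Callable, List, Tuple

# Hypothetical: any function that sends a prompt to your model and returns the generated text.
Generate = Callable[[str], str]


def knowledge_prompt(demos: List[Tuple[str, str]], statement: str) -> str:
    """Stage 1 prompt: Input/Knowledge demonstrations, then the new input."""
    parts = [f"Input: {inp}\nKnowledge: {know}" for inp, know in demos]
    parts.append(f"Input: {statement}\nKnowledge:")
    return "\n\n".join(parts)


def answer_with_knowledge(generate: Generate, demos: List[Tuple[str, str]], statement: str) -> str:
    # Stage 1: ask the model to write background knowledge about the statement.
    knowledge = generate(knowledge_prompt(demos, statement)).strip()
    # Stage 2: supply that knowledge alongside the question and ask for an answer.
    answer_prompt = (
        f"Question: {statement} Yes or no?\n\n"
        f"Knowledge: {knowledge}\n\n"
        "Explain and Answer:"
    )
    return generate(answer_prompt)


def fake_generate(prompt: str) -> str:
    # Offline stand-in for a real model call so the sketch runs as-is.
    if prompt.endswith("Knowledge:"):
        return " In golf, the player who finishes with the fewest strokes wins."
    return "No. In golf, the goal is the lowest score, not the highest point total."


demos = [
    ("Greece is larger than Mexico.",
     "Greece is approximately 131,957 sq km, while Mexico is approximately 1,964,375 sq km."),
]
print(answer_with_knowledge(fake_generate, demos,
                            "Part of golf is trying to get a higher point total than others."))
```

Splitting the work into two calls lets you inspect, filter, or cache the generated knowledge before it influences the final answer.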

Conclusion

Prompt Engineering is becoming a sub-discipline of AI, and not everything about it can be covered in one blog post.

If you want to go deeper into these topics, see the resources below.

In Part 2, we will cover custom prompt engineering techniques that we need to learn when building Gen AI solutions.

Sources:

https://machinelearningmastery.com/what-are-zero-shot-prompting-and-few-shot-prompting/

https://github.com/dair-ai/Prompt-Engineering-Guide/blob/main/guides/prompts-chatgpt.md

I write about System Design, UX, and Digital Experiences. If you liked this post, please like it and share it with your network, and don't forget to subscribe for more technical content like this.