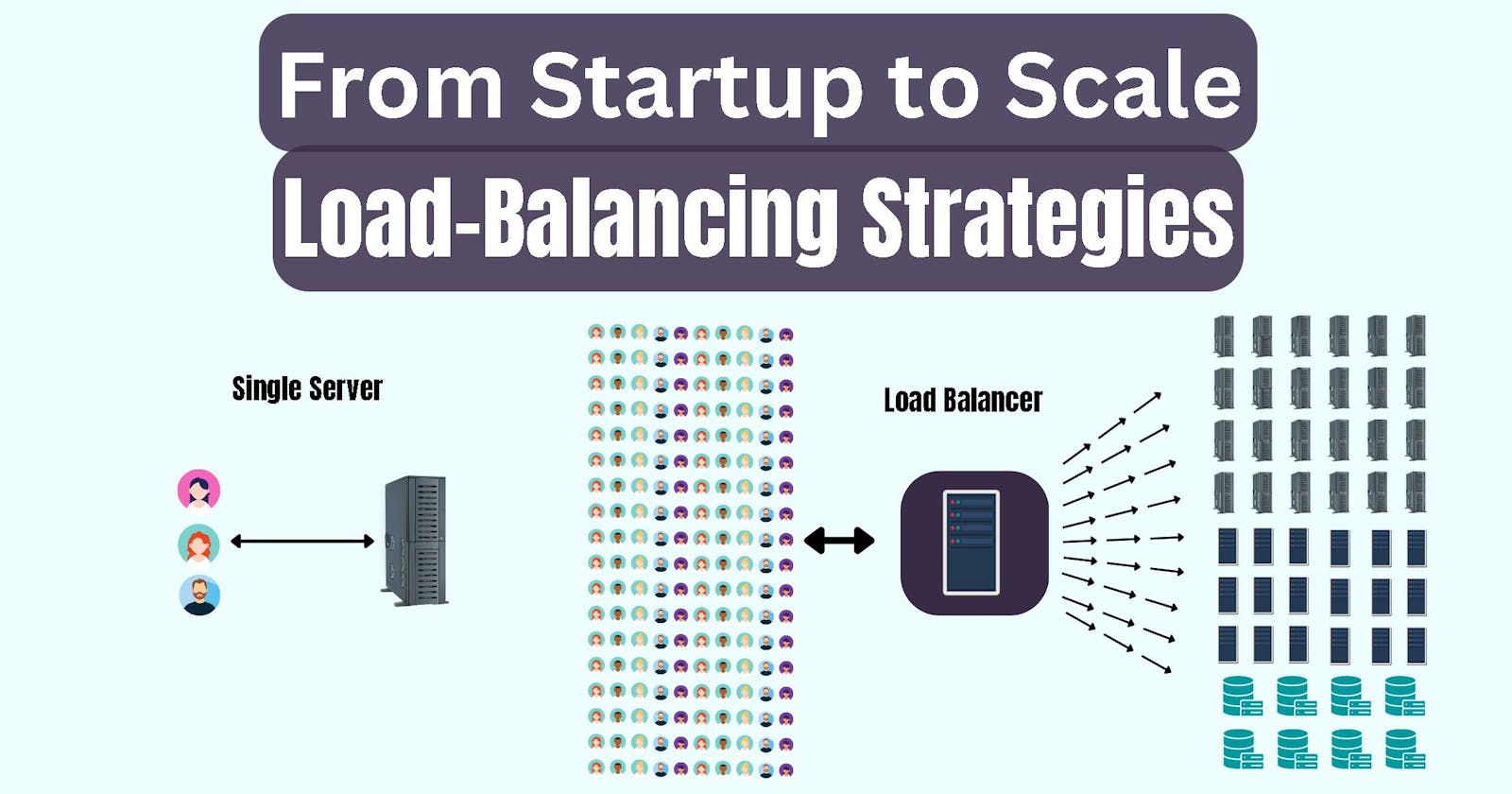

Scaling for Success: The Load Balancing Journey of a Fictional Startup

The Context

We will follow the journey of a fictional startup to see how load-balancing decisions are made at each phase of its growth.

The journey is deliberately simplified so that it is accessible to a wide audience.

The evolution of the startup's overall architecture is out of scope for this article.

Fictional startup: ProScheduler

Company size: 4

The fictional startup we'll be discussing in this article is a SaaS (Software as a Service) company that specializes in schedule management tools.

The company started small, with a few hundred users and a basic web application that helped individuals and small businesses manage their schedules more efficiently.

However, as the company's user base grew and its product matured, it quickly realized it needed to scale its infrastructure to handle the increased traffic.

At every phase of its growth, the company learned different load-balancing techniques and scaled its app accordingly.

Phase 1: The Beginning

Traffic: ~300 requests/hour

Load Balancer: None

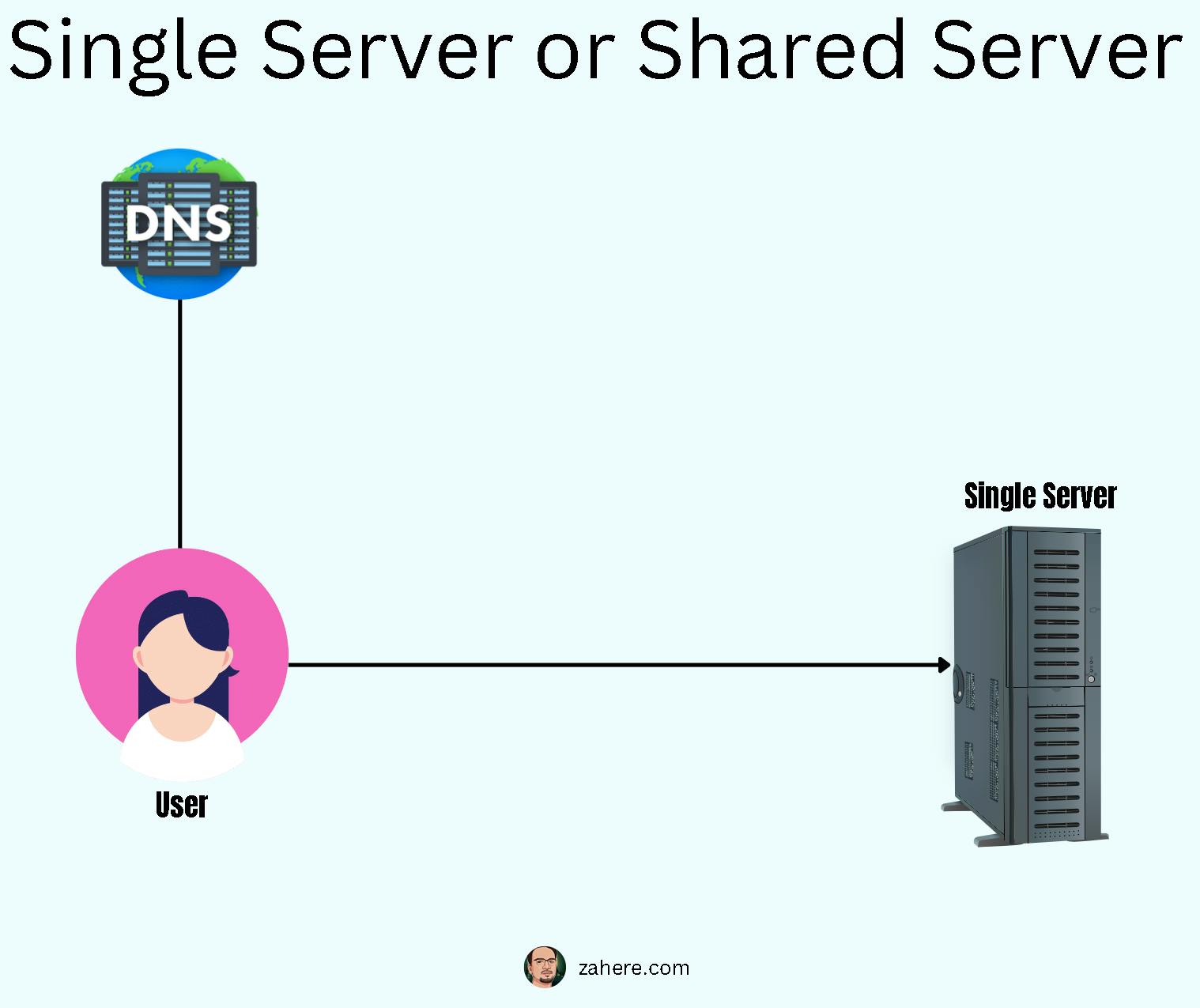

In the beginning, the startup had a small team and a basic web application. They didn't anticipate a lot of traffic, and their application, hosted on a single server, easily handled 300 requests/hour.

Traffic: ~5,000 requests/hour; spikes on weekends

Load Balancer: DNS-based load balancer

As the user base grew, they began to see occasional spikes on weekends and Mondays, when customers plan their week.

The spikes were overwhelming the single server, and they needed a way to ensure their customers were not impacted.

They introduced another server to balance the load.

What is load balancing?

Load balancing is a technique used to distribute workloads evenly across multiple servers to optimize resource usage, minimize response time, and avoid overloading any single server.

This can be accomplished in several ways, such as:

Splitting applications across multiple servers

Adding specialized components to applications to balance processing requirements and improve performance

Adding servers that collectively share the load, increasing the overall processing power available

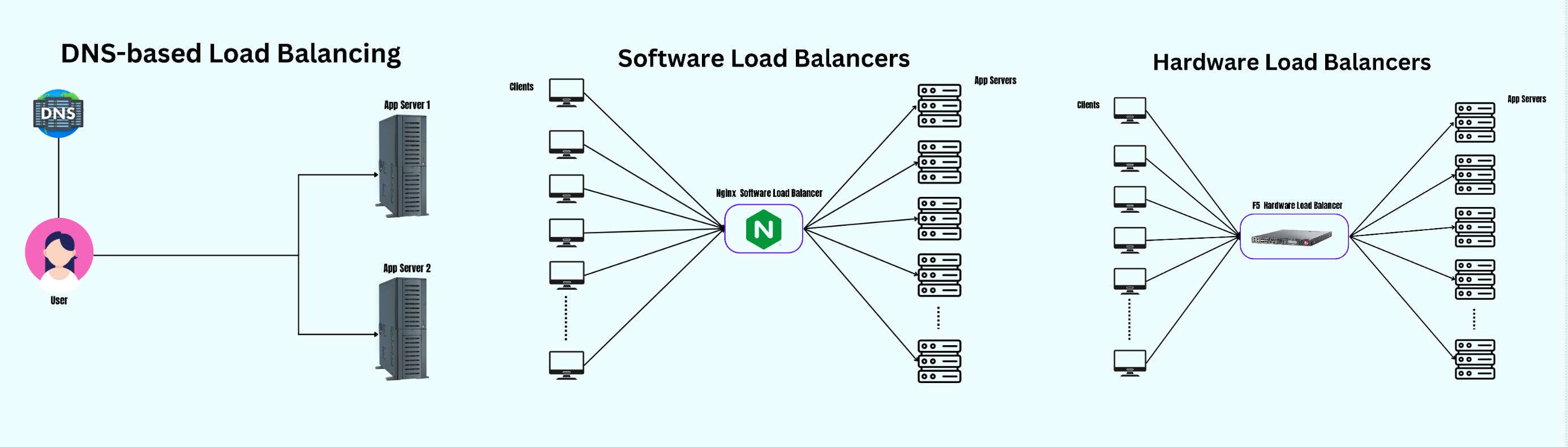

ProScheduler used a simple round-robin DNS load balancer to distribute traffic between the two servers.

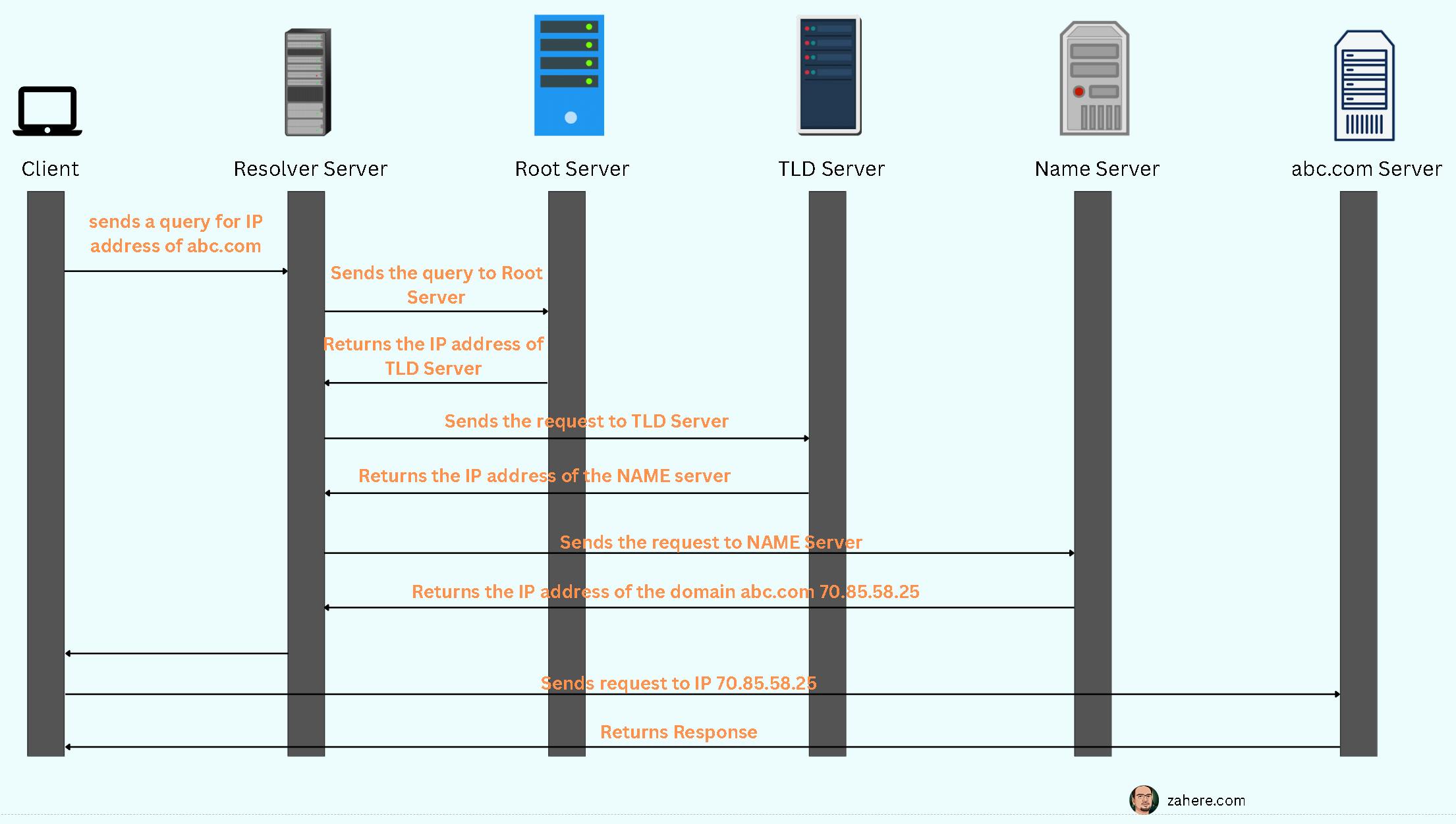

To understand DNS-based load balancing, it helps to first understand how a DNS lookup works.

How DNS lookup works

A user types a domain name (such as abc.com) into their web browser.

The browser sends a request to the local DNS resolver, which is typically provided by the user's internet service provider (ISP).

The local DNS resolver checks its cache to see if it has a recent copy of the DNS record for the requested domain.

If the local DNS resolver does not have a recent copy of the DNS record, it sends a request to the root DNS servers.

The root DNS servers respond with the address of the top-level domain (TLD) DNS servers (such as .com or .org) for the requested domain.

The local DNS resolver sends a request to the TLD DNS servers, which respond with the address of the authoritative DNS servers for the requested domain.

The local DNS resolver sends a request to the authoritative DNS servers, which respond with the IP address associated with the requested domain.

The local DNS resolver caches the DNS record and sends the IP address back to the browser.

The browser uses the IP address to establish a connection to the web server associated with the requested domain, and the website is displayed to the user.
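The lookup steps above can be modeled as a toy recursive resolver. This is a minimal Python sketch, not a real DNS client: the three dictionaries are hypothetical stand-ins for the root, TLD, and authoritative servers (real resolvers query them over the network using the DNS protocol), the name-server name and the 203.0.113.10 documentation address are made up, and TTL expiry is ignored.

```python
# Hypothetical stand-ins for the DNS hierarchy (real resolvers query these
# servers over the network; here each "server" is just a dictionary).
ROOT_SERVERS = {"com": "tld-com"}                                   # TLD -> TLD server
TLD_SERVERS = {"tld-com": {"abc.com": "ns1.abc-dns.example"}}       # domain -> authoritative server
AUTHORITATIVE_SERVERS = {"ns1.abc-dns.example": {"abc.com": "203.0.113.10"}}  # domain -> IP


class LocalResolver:
    """Toy recursive resolver with a cache; TTL expiry is ignored for brevity."""

    def __init__(self) -> None:
        self.cache: dict[str, str] = {}
        self.trace: list[str] = []  # which servers were consulted, in order

    def resolve(self, domain: str) -> str:
        # Check the cache for a recent answer first.
        if domain in self.cache:
            self.trace.append("cache")
            return self.cache[domain]
        # Ask a root server where the TLD servers for this domain are.
        tld_server = ROOT_SERVERS[domain.rsplit(".", 1)[-1]]
        self.trace.append("root")
        # Ask the TLD server for the domain's authoritative server.
        auth_server = TLD_SERVERS[tld_server][domain]
        self.trace.append("tld")
        # Ask the authoritative server for the IP address.
        ip = AUTHORITATIVE_SERVERS[auth_server][domain]
        self.trace.append("authoritative")
        # Cache the answer, then hand it back to the browser.
        self.cache[domain] = ip
        return ip


resolver = LocalResolver()
print(resolver.resolve("abc.com"))  # 203.0.113.10, after a full walk
print(resolver.resolve("abc.com"))  # 203.0.113.10, served from the cache
print(resolver.trace)               # ['root', 'tld', 'authoritative', 'cache']
```

The second lookup never leaves the resolver, which is why cached answers make repeat visits fast.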

How does DNS hold records for both servers?

In DNS-based load balancing, the DNS server is configured with multiple records for the same domain name, each pointing to a different server.

The DNS server is typically configured by the system administrator or network administrator of an organization.

If a website is hosted by a third-party provider such as GoDaddy, the provider typically sets up and configures the DNS server on the customer's behalf and gives them the information needed to point their domain name to the provider's servers.
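A minimal sketch of how round-robin DNS spreads clients across ProScheduler's two servers, assuming an authoritative server that returns the same A records on every query but rotates their order, and clients that connect to the first address in the answer. The domain `app.proscheduler.example` and the IP addresses are hypothetical.

```python
from collections import defaultdict


class RoundRobinDNS:
    """Toy authoritative DNS server that rotates its A records on every query."""

    def __init__(self, records: dict[str, list[str]]) -> None:
        self.records = records              # domain -> list of server IPs
        self._queries = defaultdict(int)    # per-domain query counter

    def query(self, domain: str) -> list[str]:
        ips = self.records[domain]
        offset = self._queries[domain] % len(ips)
        self._queries[domain] += 1
        # Same records every time, but starting from a different one.
        return ips[offset:] + ips[:offset]


dns = RoundRobinDNS({"app.proscheduler.example": ["198.51.100.1", "198.51.100.2"]})
# Assume each client connects to the first address in its answer.
first_choices = [dns.query("app.proscheduler.example")[0] for _ in range(4)]
print(first_choices)  # ['198.51.100.1', '198.51.100.2', '198.51.100.1', '198.51.100.2']
```

In practice, resolvers and browsers cache answers for the record's TTL, so the rotation is coarse: many users behind the same resolver can land on the same server until the cached answer expires.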

This load balancer worked well for ProScheduler in the early stages as it was easy to set up and required minimal maintenance.

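However, as the startup began to gain traction, it became clear that it needed to scale its infrastructure to handle more traffic. DNS-based load balancing has no visibility into server health or load, and DNS resolvers cache records for the duration of their TTL, so traffic could keep flowing to an overloaded or failed server.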

Phase 2: Scaling up

With more users came more traffic, and the startup explored different types of load balancers and load-balancing techniques to keep up.

What are the different types of load balancers?

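Load balancers are commonly grouped by how they are deployed (DNS-based, software, or hardware) and by the network layer at which they make decisions: Layer 4 (transport) load balancers see only IP addresses and ports, while Layer 7 (application) load balancers can inspect the request itself, such as the URL path or HTTP headers.

ProScheduler opted for software-based Layer 4 load balancers, such as NGINX, which could handle more connections and provided basic load-balancing capabilities.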

These load balancers operate at the transport layer and distribute traffic based on IP address and port number, without inspecting the content of the request.
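To illustrate what deciding "based on IP address and port number" means, here is a small Python sketch of a Layer 4 selection function. It assumes hashing the connection's 4-tuple as the selection policy (one of several policies a Layer 4 balancer might use), and the backend addresses are hypothetical. The key point is that the function never looks at the HTTP request itself.

```python
import hashlib
from typing import NamedTuple


class Connection(NamedTuple):
    """The transport-level fields a Layer 4 balancer can see."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical upstream servers behind the load balancer.
BACKENDS = ["10.0.0.11:8080", "10.0.0.12:8080"]


def pick_backend_l4(conn: Connection, backends: list[str] = BACKENDS) -> str:
    """Choose a backend from IP addresses and ports alone.

    Hashing the 4-tuple keeps every packet of one connection on the same
    backend; the HTTP request inside the connection is never parsed.
    """
    key = f"{conn.src_ip}:{conn.src_port}->{conn.dst_ip}:{conn.dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


conn = Connection("203.0.113.7", 51514, "198.51.100.1", 443)
print(pick_backend_l4(conn))  # the same backend every time for this connection
```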

Traffic: ~500-1,000 requests/second

Load Balancer: Software Load Balancer (NGINX)

What is NGINX?

NGINX (pronounced "engine-x") is an open-source, high-performance web server and reverse proxy software.

It is often used as a web server, load balancer, and reverse proxy, and it can also be used as a mail proxy and HTTP cache.

NGINX is designed to handle a large number of concurrent connections, making it a popular choice for high-traffic websites and web applications.

How does NGINX work?

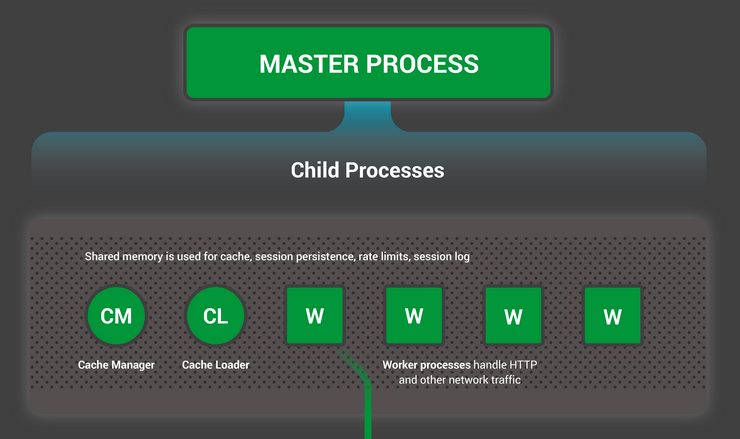

The NGINX architecture can be divided into three main components:

Master

Worker

Cache

Master process: The NGINX master process reads the configuration files, binds to the listening ports, spawns the worker processes, and handles signals (for example, a request to reload the configuration). It does not accept or process client connections itself.

Cache processes: When proxy caching is enabled, NGINX runs a cache loader and a cache manager. The cache loader runs at startup, checks the items in the on-disk cache, and populates NGINX's in-memory database with the cache metadata. The cache manager runs periodically and prunes entries to keep the disk cache within its configured size.

Worker processes: The NGINX worker processes accept and process client connections. Each worker process handles many connections at once using an event-driven, non-blocking model.
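NGINX is written in C and relies on OS mechanisms such as epoll or kqueue for its event loop. The Python sketch below is only an analogy for the worker model described above: a single thread, using the standard `selectors` module, serves several connections by reacting to whichever one is ready instead of blocking on any one client. `socket.socketpair()` stands in for accepted client connections so the example runs without a network, and the "handled ..." reply format is made up.

```python
import selectors
import socket


def event_loop_demo(num_clients: int = 3) -> list[bytes]:
    """One thread, many connections: a toy event-driven worker."""
    sel = selectors.DefaultSelector()
    clients = []
    for i in range(num_clients):
        # socketpair() simulates an accepted connection: one end for the
        # worker, the other for the client.
        worker_side, client_side = socket.socketpair()
        worker_side.setblocking(False)
        sel.register(worker_side, selectors.EVENT_READ)
        clients.append(client_side)
        client_side.sendall(f"request {i}".encode())

    pending = num_clients
    while pending:
        # Wait until at least one connection is readable, then serve only the
        # ready ones; the worker never blocks on a single slow client.
        for key, _events in sel.select():
            conn = key.fileobj
            data = conn.recv(1024)
            conn.sendall(b"handled " + data)
            sel.unregister(conn)
            conn.close()
            pending -= 1

    replies = [c.recv(1024) for c in clients]
    for c in clients:
        c.close()
    sel.close()
    return replies


print(event_loop_demo())
```

Each client receives its own reply (for example, `b'handled request 0'`), even though a single thread serviced all of them.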

Phase 3: Growing pains

As ProScheduler's user base grew and the demand for its schedule management tool increased, it began to experience growing pains in terms of scalability and performance.

To address these issues, the startup decided to adopt a microservices architecture.

As the number of users and requests increased, the startup began to see heavy traffic on certain services, such as the user management service and the scheduling service.

To ensure that these services could handle the increased traffic, the startup implemented an F5 BIG-IP load balancer.

The F5 BIG-IP load balancer is used to distribute incoming traffic among multiple servers running each service.

Because it operates at Layer 7 (the application layer), the BIG-IP can route traffic based on the content of the request, such as the URL path or HTTP headers, rather than just the IP address or port.

This allows the BIG-IP to route traffic to the appropriate service based on the functionality being requested.
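Content-based routing can be sketched in a few lines of Python. This is not BIG-IP configuration (on BIG-IP this kind of logic is typically expressed with local traffic policies or iRules); the path prefixes, service pools, and addresses are hypothetical, and each pool is balanced round robin for simplicity.

```python
from itertools import cycle

# Hypothetical service pools, keyed by the URL path prefix they serve.
SERVICE_POOLS = {
    "/users": cycle(["10.0.1.11", "10.0.1.12"]),      # user management service
    "/schedules": cycle(["10.0.2.11", "10.0.2.12"]),  # scheduling service
}


def route_l7(path: str) -> str:
    """Pick a server by inspecting the request path (Layer 7 routing)."""
    # Check longer prefixes first so more specific rules win.
    for prefix in sorted(SERVICE_POOLS, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            # Within the chosen service, rotate through its servers.
            return next(SERVICE_POOLS[prefix])
    raise LookupError(f"no service handles {path!r}")
```

For example, `route_l7("/users/42")` is sent to the user management pool, while `route_l7("/schedules/today")` goes to the scheduling pool. A Layer 4 balancer could not make this distinction, because both requests may arrive on the same IP address and port.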

To handle the increased traffic, the startup configured the BIG-IP to use load-balancing algorithms such as Least Connections, which steers new requests away from busy servers, and IP Hash, which keeps each client on the same server.

Traffic: ~2,000-3,000 requests/second

Load Balancer: Hardware Load Balancer (F5 BIG-IP)

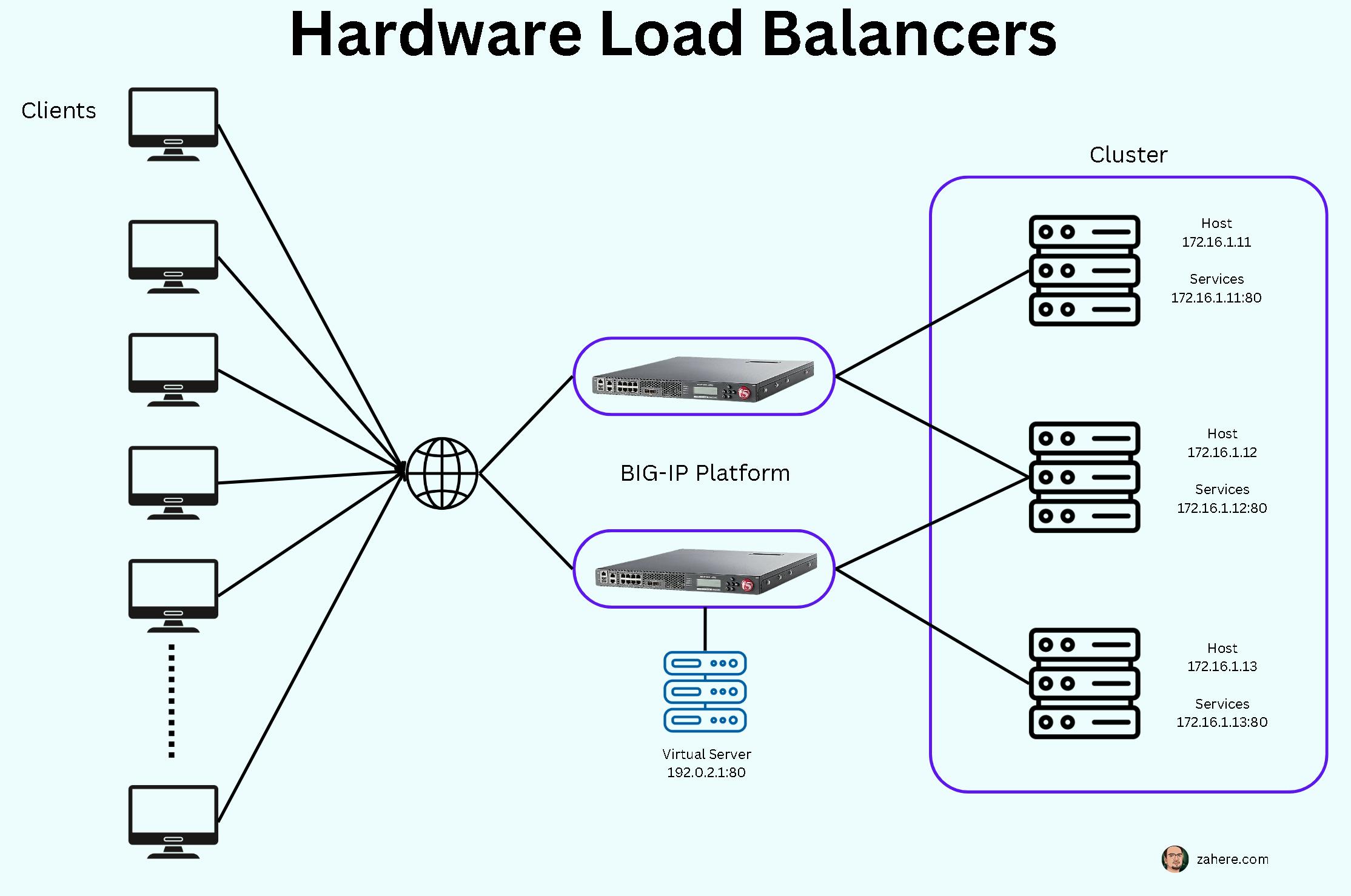

What is F5 BIG-IP?

F5 BIG-IP is a hardware-based load balancer and application delivery controller (ADC) that helps to improve the availability, performance, and security of web applications.

Some terms to understand:

Host: A host can be a physical server or a virtual machine running on a hypervisor.

Service: A web server or application server running on the host and exposed through a port.

Cluster: A collection of hosts

Virtual Server: A virtual server is a proxy of the actual server (physical, virtual, or container). Combined with a virtual IP address, this is the application endpoint that is presented to the outside world.

The basic application delivery transaction is as follows:

The client attempts to connect with the service.

The ADC accepts the connection, and after deciding which host should receive the connection, changes the destination IP (and possibly port) to match the service of the selected host (note that the source IP of the client is not touched).

The host accepts the connection and responds back to the original source, the client, via its default route, the ADC.

The ADC intercepts the return packet from the host and now changes the source IP (and possibly port) to match the virtual server IP and port, and forwards the packet back to the client.

The client receives the return packet, believing that it came from the virtual server, and continues the process.
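The address rewriting in this transaction can be traced with a short Python sketch. It models only the IP/port fields of each packet; the virtual server address, the selected host, and the client address are hypothetical, and host selection is assumed to have already happened.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: tuple[str, int]  # (IP, port)
    dst: tuple[str, int]


VIRTUAL_SERVER = ("198.51.100.10", 443)  # hypothetical virtual IP and port
SELECTED_HOST = ("10.0.0.21", 8443)      # hypothetical host picked by the ADC


def adc_inbound(pkt: Packet, host: tuple[str, int] = SELECTED_HOST) -> Packet:
    # Destination rewrite: send to the selected host; the client's source is untouched.
    return replace(pkt, dst=host)


def adc_outbound(pkt: Packet, vip: tuple[str, int] = VIRTUAL_SERVER) -> Packet:
    # Source rewrite on the return path: the reply appears to come from the virtual server.
    return replace(pkt, src=vip)


client = ("203.0.113.7", 51514)
request = Packet(src=client, dst=VIRTUAL_SERVER)  # 1. client connects to the virtual server
to_host = adc_inbound(request)                    # 2. ADC rewrites the destination
reply = Packet(src=to_host.dst, dst=to_host.src)  # 3. host replies to the client via the ADC
to_client = adc_outbound(reply)                   # 4. ADC rewrites the source
print(to_host)    # Packet(src=('203.0.113.7', 51514), dst=('10.0.0.21', 8443))
print(to_client)  # Packet(src=('198.51.100.10', 443), dst=('203.0.113.7', 51514))
```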

What are the different types of load-balancing algorithms?

There are several load-balancing algorithms; the most commonly used are:

Round Robin: In this algorithm, requests are sent to each server in the pool in turn, so every server gets an equal share of incoming requests.

Least Connections: In this algorithm, traffic is distributed based on the number of active connections each server currently has. The server with the fewest active connections receives the next incoming request.

IP Hash: In this algorithm, traffic is distributed based on the source IP address of the incoming request. The load balancer uses a hash function to map the source IP address to a specific server in the pool, so a given client keeps reaching the same server as long as the pool does not change.

Weighted Round Robin: This algorithm works like Round Robin, but each server is assigned a weight that determines how much traffic it receives relative to the others.
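Minimal Python sketches of the four algorithms follow. They assume a fixed list of server names and ignore health checks, and IP Hash uses SHA-256 only because it is stable across processes (Python's built-in `hash()` for strings is randomized per process). These choices are illustrative, not how any particular load balancer implements them.

```python
import hashlib
from itertools import cycle
from typing import Optional


class RoundRobin:
    """Hand requests to each server in turn."""

    def __init__(self, servers: list[str]) -> None:
        self._order = cycle(servers)

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._order)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip: Optional[str] = None) -> str:
        # min() returns the first server with the lowest count, so ties go
        # to the server listed earliest.
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when a connection to `server` closes.
        self.active[server] -= 1


class IPHash:
    """Map each client IP to a fixed server."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)

    def pick(self, client_ip: str) -> str:
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


class WeightedRoundRobin:
    """Round robin where a server with weight w gets w turns per cycle."""

    def __init__(self, weights: dict[str, int]) -> None:
        # Naive expansion: {"big": 3, "small": 1} -> big, big, big, small, ...
        self._order = cycle([s for s, w in weights.items() for _ in range(w)])

    def pick(self, client_ip: Optional[str] = None) -> str:
        return next(self._order)


rr = RoundRobin(["a", "b", "c"])
print([rr.pick() for _ in range(4)])   # ['a', 'b', 'c', 'a']

lc = LeastConnections(["a", "b"])
print(lc.pick(), lc.pick())            # a b
lc.release("a")
print(lc.pick())                       # a (it now has the fewest connections)

ip = IPHash(["a", "b", "c"])
print(ip.pick("203.0.113.7") == ip.pick("203.0.113.7"))  # True

wrr = WeightedRoundRobin({"big": 3, "small": 1})
print([wrr.pick() for _ in range(4)])  # ['big', 'big', 'big', 'small']
```

The weighted version simply repeats each server `weight` times, which sends requests in bursts. Some production balancers, NGINX among them, use a "smooth" weighted round robin that interleaves servers instead.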

The Layer 7 load balancer provided several benefits for the startup.

For one, it allowed them to distribute traffic based on more advanced rules, which helped to improve the application's performance and reduce downtime.

Additionally, it provided more advanced load balancing capabilities, such as content-based routing and rate shaping, which helped to distribute traffic more efficiently.

Phase 4: Scaling globally

As the startup became a popular destination on the internet, it needed to further scale its infrastructure to handle even more traffic.

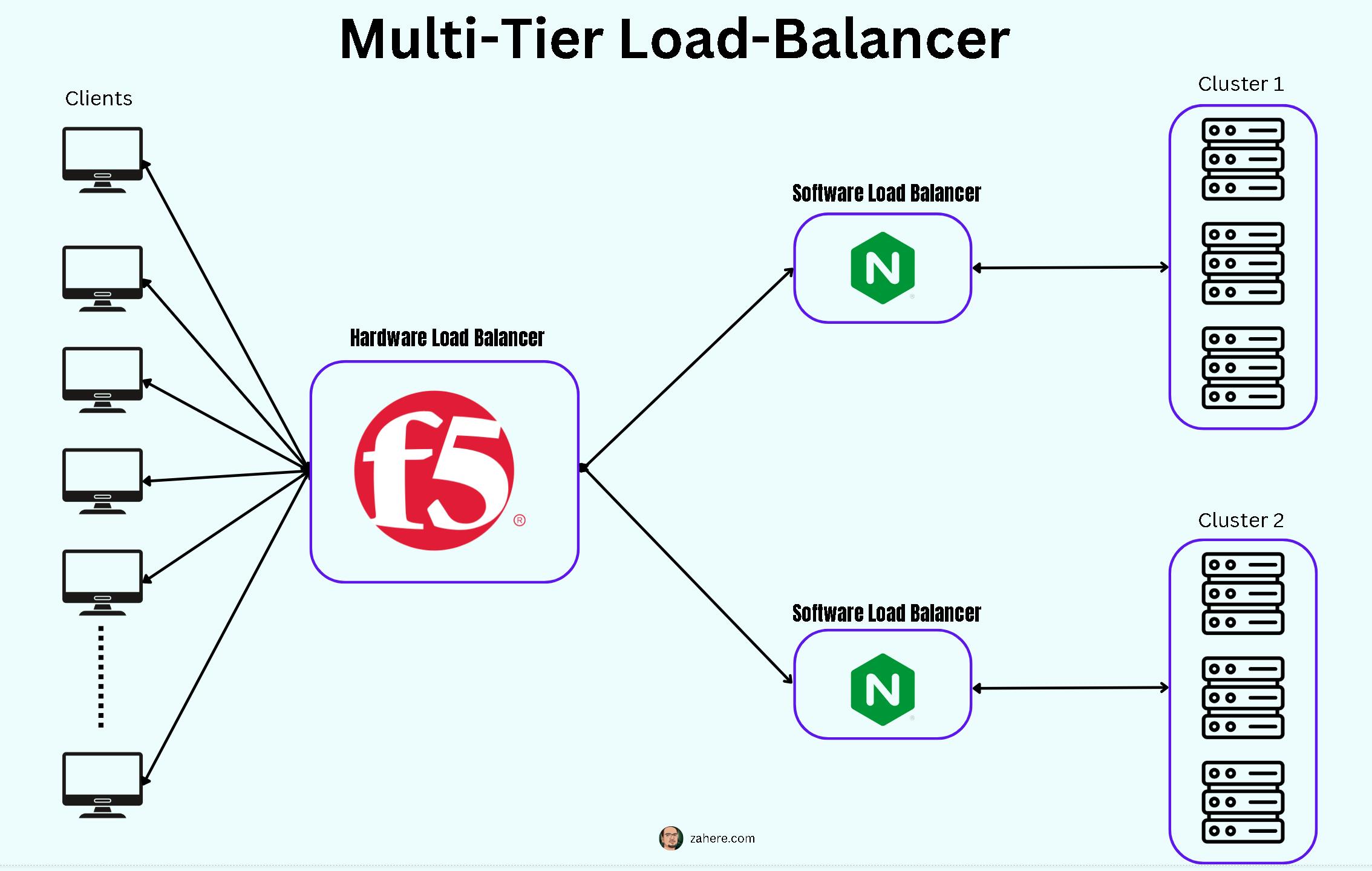

They implemented a multi-tier load balancing architecture, with a Layer 7 load balancer in front and multiple Layer 4 load balancers behind it, to distribute traffic to multiple web server clusters.

Traffic: ~10,000-15,000 requests/second

Load Balancer: Multi-tier Load Balancer (F5 BIG-IP + NGINX)

This approach improved the performance and reliability of the application and reduced downtime. The Layer 7 tier kept providing advanced capabilities such as content-based routing and rate shaping, while the Layer 4 tier distributed traffic efficiently across multiple web server clusters.
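The two tiers described in this phase can be sketched in Python as a Layer 7 step that picks a web server cluster from the request path, followed by a Layer 4 step that spreads connections across that cluster's servers. The cluster names, path rules, and addresses are hypothetical, paths are assumed to start with "/", and round robin stands in for whatever algorithm each tier actually uses.

```python
from itertools import cycle

# Tier 2 (Layer 4): each hypothetical web server cluster has its own pool,
# balanced round robin.
CLUSTERS = {
    "cluster-a": cycle(["10.1.0.11", "10.1.0.12"]),
    "cluster-b": cycle(["10.2.0.11", "10.2.0.12"]),
}

# Tier 1 (Layer 7): hypothetical rules, checked in order; "/" is the catch-all.
L7_RULES = [("/api/", "cluster-a"), ("/", "cluster-b")]


def route(path: str) -> tuple[str, str]:
    # Tier 1 inspects the request content (here, the URL path) to pick a cluster.
    cluster = next(name for prefix, name in L7_RULES if path.startswith(prefix))
    # Tier 2 never looks at the path; it only spreads connections in the cluster.
    return cluster, next(CLUSTERS[cluster])
```

For example, `route("/api/events")` returns `("cluster-a", "10.1.0.11")`, and `route("/index.html")` returns `("cluster-b", "10.2.0.11")`. Keeping the expensive content inspection in one tier lets the Layer 4 tier stay simple and fast.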

Phase 5: Expanding globally

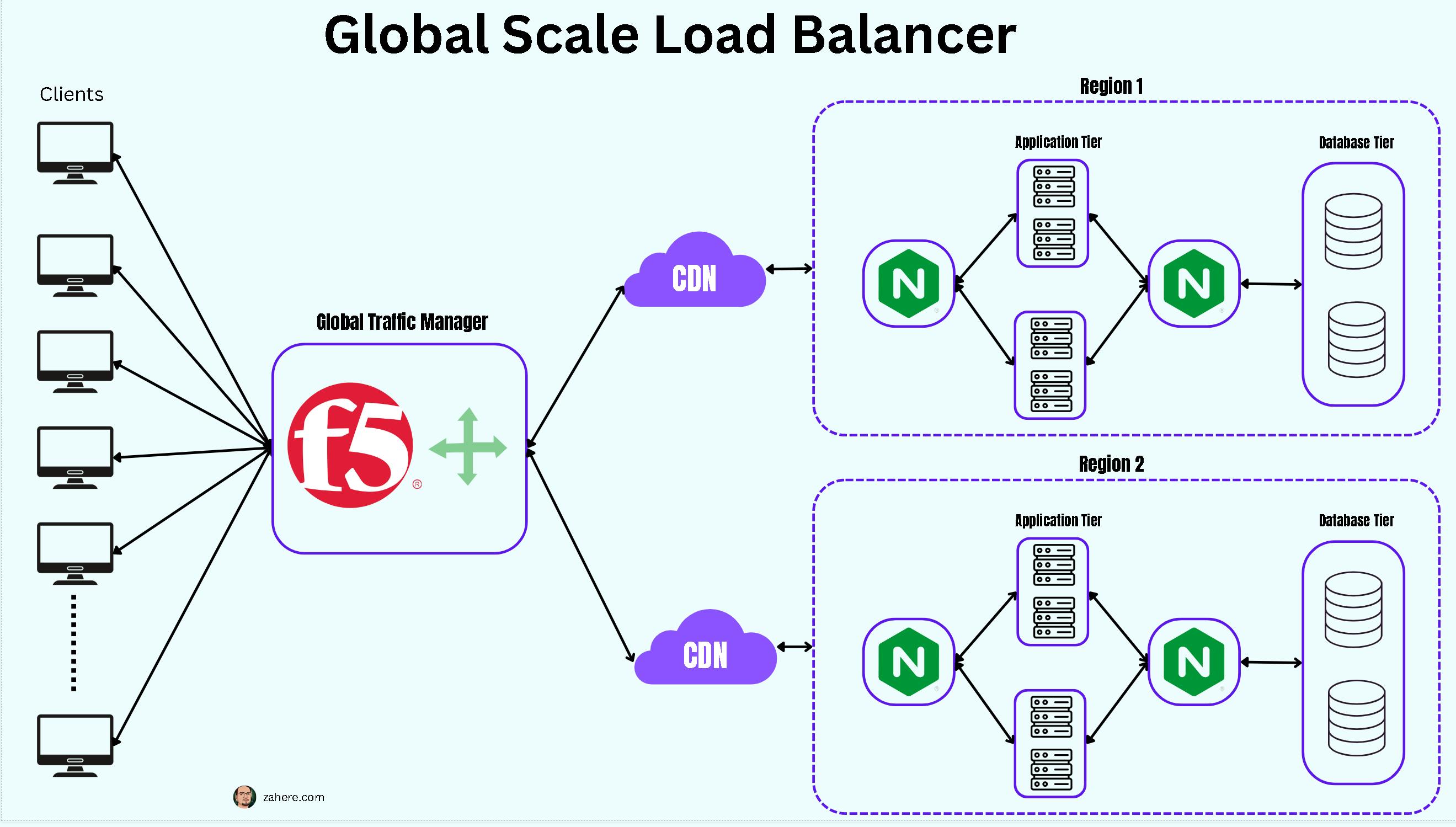

As the startup expanded globally, it faced new challenges in terms of managing and distributing traffic effectively.

To address these challenges, the startup implemented a combination of a traffic manager, a Content Delivery Network (CDN), and regional data centers.

The traffic manager, such as F5 BIG-IP Global Traffic Manager (GTM), is a DNS-based load-balancing solution. It lets the startup manage and distribute traffic globally by directing each client to the closest or best-performing server based on the client's location.
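A global traffic manager answers DNS queries with the address of a data center chosen per client. The Python sketch below assumes a hypothetical table of data centers, each with a health flag and a measured latency from each client region, and answers with the virtual IP of the lowest-latency healthy data center. Real global traffic managers offer more selection methods than this, so treat it only as an illustration of the idea.

```python
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    vip: str                    # virtual IP returned in the DNS answer
    healthy: bool               # result of the traffic manager's health checks
    latency_ms: dict[str, int]  # measured round-trip time from each client region


# Hypothetical data centers and measurements.
DATA_CENTERS = [
    DataCenter("us-east", "198.51.100.10", True, {"NA": 20, "EU": 90, "APAC": 180}),
    DataCenter("eu-west", "198.51.100.20", True, {"NA": 85, "EU": 15, "APAC": 160}),
    DataCenter("ap-south", "198.51.100.30", False, {"NA": 200, "EU": 140, "APAC": 30}),
]


def answer_dns_query(client_region: str) -> str:
    """Return the VIP of the lowest-latency healthy data center for this region."""
    healthy = [dc for dc in DATA_CENTERS if dc.healthy]
    if not healthy:
        raise RuntimeError("no healthy data center available")
    best = min(healthy, key=lambda dc: dc.latency_ms[client_region])
    return best.vip


for region in ("NA", "EU", "APAC"):
    print(region, answer_dns_query(region))
# NA 198.51.100.10
# EU 198.51.100.20
# APAC 198.51.100.20
```

Because the `ap-south` data center is marked unhealthy, an APAC client is sent to `eu-west`, the next-lowest latency option, instead.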

The startup also implemented a CDN, either built in-house or provided by a third-party vendor. A CDN is a network of servers distributed across many locations around the world that caches content close to users.

To further improve performance and availability, the startup also set up regional data centers in strategic locations around the world.

This allows the startup to store data and run services closer to users, reducing the latency and improving the performance of the application.

In conclusion, ProScheduler's journey illustrates the importance of load balancing and traffic management in keeping web applications and services available and performant, and shows that the solution has to evolve as the company grows and meets new challenges.

