Pay attention to the numbers I am about to call out, because they are going to blow your mind.

The insanely popular ChatGPT is built on a model with a whopping 175B parameters. In fact, roughly 300 billion tokens (words and word pieces) were fed into the system during training.

In the context of machine learning and neural networks, "parameters" refer to the values that determine the behavior of a model. These values are often represented as weights or biases.
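To make "weights and biases" concrete, here is a minimal sketch that counts the parameters of a tiny fully connected network. The function name `count_dense_parameters` and the layer sizes are hypothetical, chosen only for illustration; a real language model uses a very different architecture, but its 175B figure is a count of the same kind of values.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units in each layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per input-to-output connection
        biases = n_out          # one bias per output unit
        total += weights + biases
    return total


# Hypothetical toy network: 4 inputs, 8 hidden units, 2 outputs
toy_parameters = count_dense_parameters([4, 8, 2])
print(toy_parameters)  # 4*8 + 8 + 8*2 + 2 = 58
```

Even this toy network has 58 parameters, which gives a feel for how quickly the count grows as layers get wider and deeper.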

Training a language model with 175B parameters requires a significant amount of energy and computing power, and this is where Microsoft's supercomputer comes in.

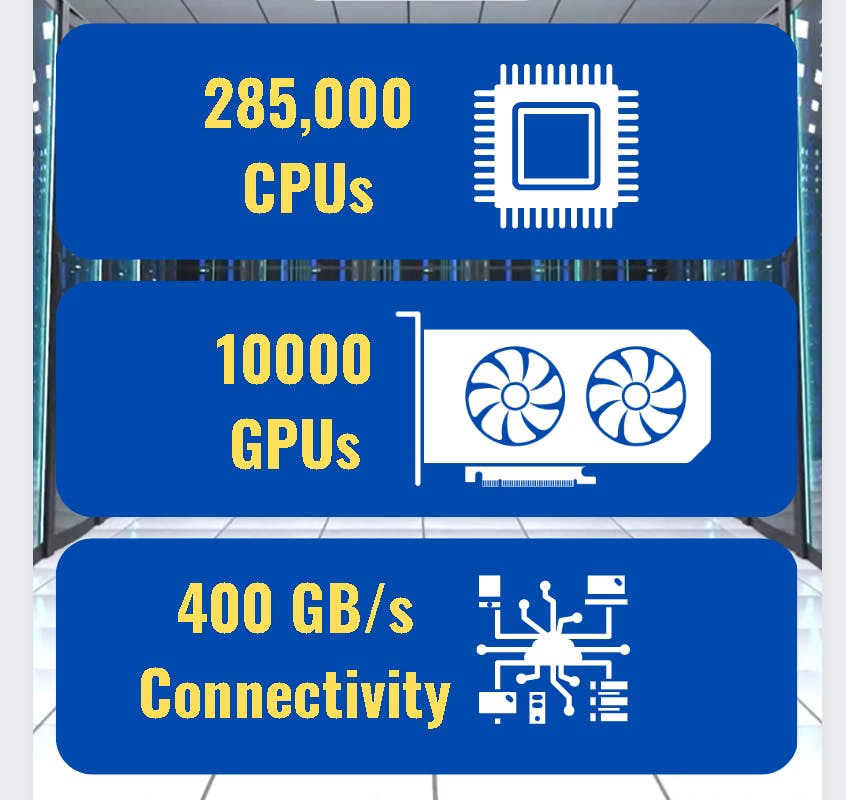

In 2020, Microsoft collaborated with OpenAI to create what they claim to be one of the most powerful supercomputers of all time.

It has 285,000 processor cores, 10,000 GPUs, and 400 gigabits per second of network connectivity for each GPU server.

To put things in perspective, the M1 Max chip in the MacBook delivers 10.4 teraflops, whereas Microsoft's supercomputer, on which GPT-3.5 was trained, delivers 100.7 petaflops of performance.

100.7 petaflops is equal to 100,700,000,000,000,000 floating point operations per second. This is equivalent to 100.7 x 1,000 teraflops, or 100,700 teraflops.

At 10.4 teraflops per MacBook, this supercomputer is roughly equal to 9,700 MacBooks.
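The comparison can be checked with a few lines of arithmetic. This is a rough sketch that uses only the two throughput figures quoted above and assumes peak teraflops are directly comparable across such different machines, which is a simplification. The variable names are illustrative.

```python
PETA = 10**15  # floating point operations per second in one petaflop
TERA = 10**12  # floating point operations per second in one teraflop

# Figures quoted in the article
supercomputer_flops = 100.7 * PETA
m1_max_flops = 10.4 * TERA

# Convert the supercomputer figure to teraflops, then divide by one MacBook
supercomputer_teraflops = supercomputer_flops / TERA
macbook_equivalents = supercomputer_flops / m1_max_flops

print(f"{supercomputer_teraflops:,.0f} teraflops")
print(f"about {macbook_equivalents:,.0f} MacBooks")  # roughly 9,683
```

Dividing 100,700 teraflops by 10.4 teraflops per MacBook gives a little under ten thousand machines.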


Related Articles: https://zahere.com/how-chatgpt-works-the-architectural-details-you-need-to-know

Source: https://venturebeat.com/ai/openai-microsoft-azure-supercomputer-ai-model-training/