Designing a Live Streaming Service for 20 million concurrent users

Part 2

Note: Part 1 of the Live Streaming Service design is here

Live Streaming Service MVP

In Part 1, we designed a Live Streaming Service MVP component-by-component.

Let's scale this to 20M+ users

Hypothetical scenario

- Event: Cricket Match India vs Pakistan

- Location: Adelaide, Australia

Let's focus on 2 workflows

- Ingestion: Video Feed to Distribution

- Playback: Player to Distribution servers

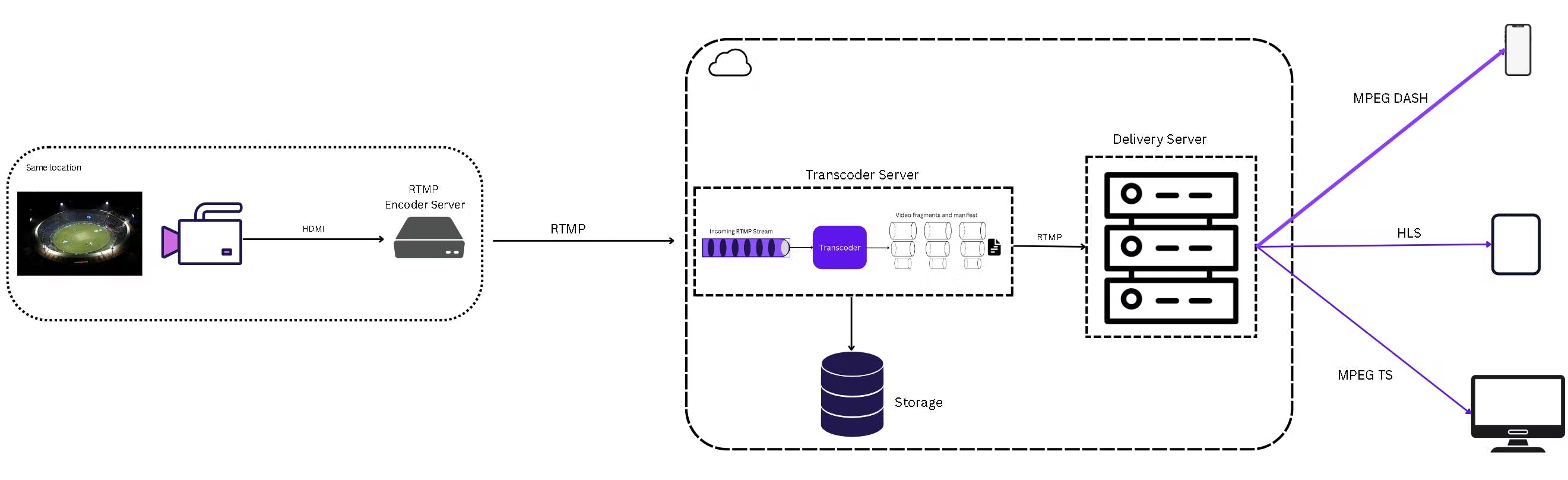

Ingestion is CPU-intensive: to stream easily over the network, the incoming RTMP stream must be transcoded into one-second chunks at each of several bitrates.
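To make the chunking concrete, here is a minimal sketch that lists the chunks a transcoder would have to emit: one per second, per bitrate. The bitrate ladder, the stream ID, and the `segment_path` naming scheme are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Hypothetical bitrate ladder; a real service would tune these values.
LADDER = [
    Rendition("360p", 800),
    Rendition("720p", 2500),
    Rendition("1080p", 5000),
]

SEGMENT_SECONDS = 1  # the article assumes one-second chunks


def segment_path(stream_id: str, rendition: Rendition, index: int) -> str:
    """Build an illustrative path for one chunk of one rendition."""
    return f"{stream_id}/{rendition.name}/segment_{index:06d}.ts"


def segments_for_window(stream_id: str, start_s: int, end_s: int) -> list[str]:
    """List every chunk needed to cover the half-open window [start_s, end_s)."""
    paths = []
    for index in range(start_s // SEGMENT_SECONDS, end_s // SEGMENT_SECONDS):
        for rendition in LADDER:
            paths.append(segment_path(stream_id, rendition, index))
    return paths


if __name__ == "__main__":
    # Two seconds of video across three renditions gives six chunks.
    for path in segments_for_window("ind-vs-pak", 0, 2):
        print(path)
```

Every extra rendition multiplies the transcoding work, which is one reason ingestion is the CPU-heavy half of the pipeline.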

Let's revisit the Transcoder Server with this illustration

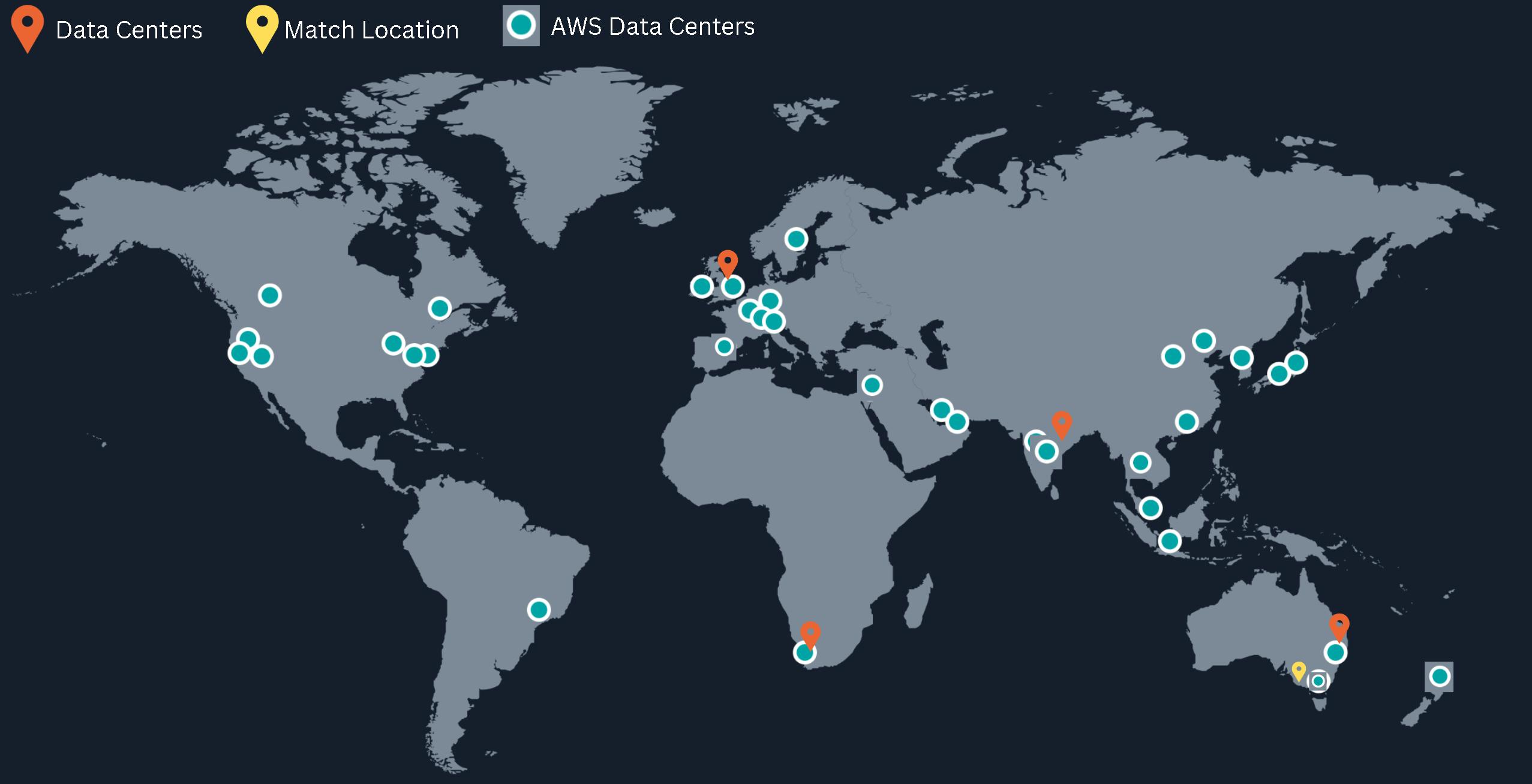

A global sports event streaming company can either own its data centers (DCs) across the globe in strategic locations or outsource the infrastructure to a vendor like AWS.

Either way - all data centers have streaming servers positioned to take in the incoming feed.

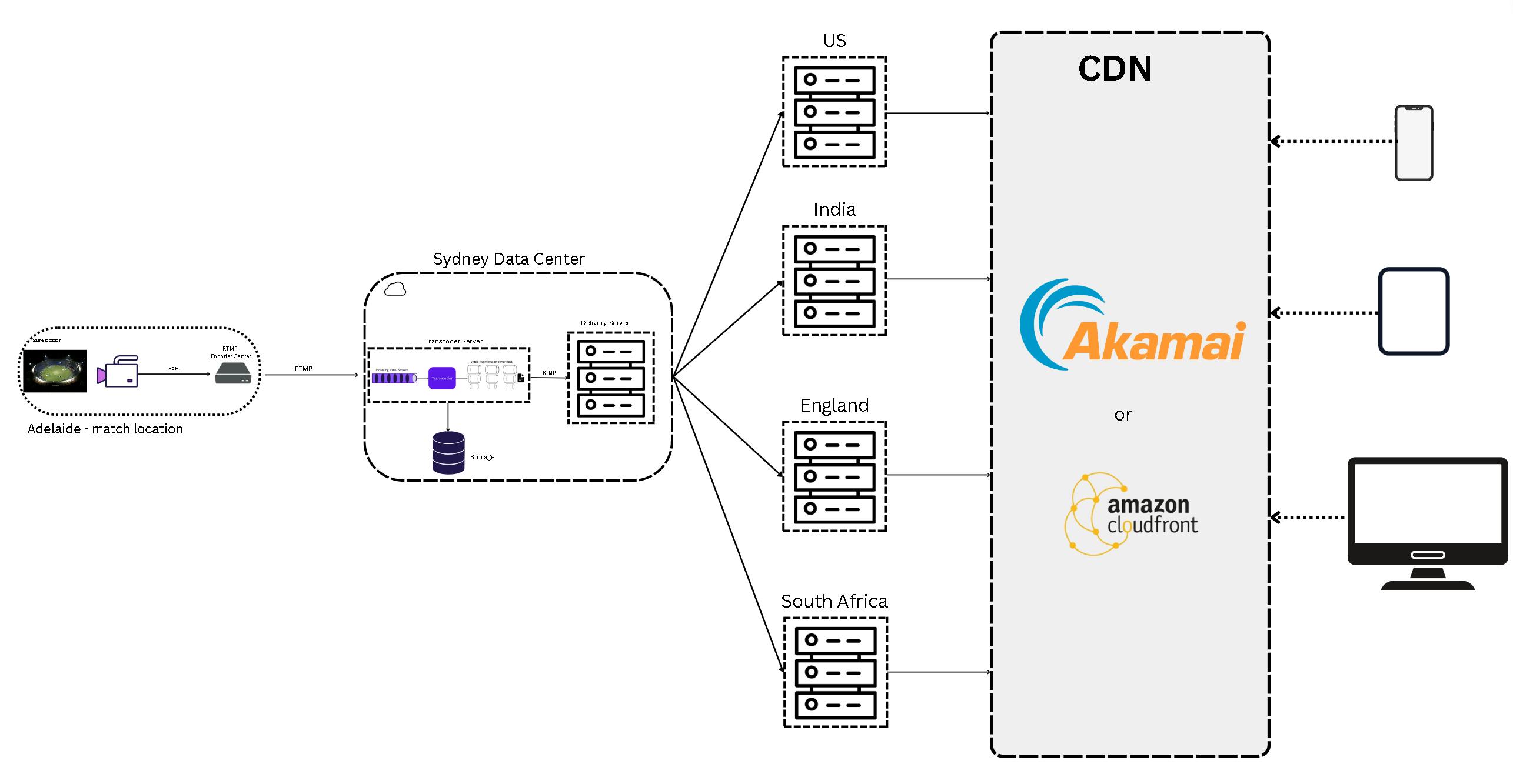

The video production team at the Adelaide cricket stadium starts the stream and connects to the streaming server API in the nearest data center, in Sydney. The streaming server returns a stream ID.

The servers transcode the video into multiple formats and bitrates and push the resulting chunks to host servers in multiple data centers across the globe.

'Caching' the video segments in multiple DCs takes load off the source server, but on its own this is good enough for only a few hundred users.

In a high-stakes match like India vs Pakistan, traffic can surge dramatically at specific moments in the match.

Too many requests hitting a few DCs at once can stampede the system, causing lag, dropouts, and disconnections from the stream.

This is the “thundering herd” problem.

This is where a CDN comes in.

Instead of having clients connect directly to the live stream server, they are routed to the CDN edge servers.

CDN edge servers can either Pull the data from the source data centers or the data centers can Push the video segments to the CDN servers across the globe.

When a player connects to a CDN server, the segment request is handled by one of the HTTP proxies at the CDN PoP, which checks whether the segment is already in an edge cache.

If the segment is in the cache, it is returned directly from there. If not, the proxy issues an HTTP request to the regional DC cache. If the segment is not in the DC cache either, the DC requests it from the origin server handling that particular stream.
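The lookup chain described above (edge cache, then regional DC cache, then origin) can be sketched as a small tiered cache. This is a simplified illustration, not how any particular CDN is implemented: the `CacheTier` class, the tier names, and `fetch_from_origin` are hypothetical, and there is no eviction or expiry, which a real cache for live segments would need.

```python
from typing import Callable, Dict, Optional


class CacheTier:
    """One cache layer that falls back to a parent tier, or to the origin, on a miss."""

    def __init__(
        self,
        name: str,
        parent: Optional["CacheTier"] = None,
        origin: Optional[Callable[[str], bytes]] = None,
    ) -> None:
        self.name = name
        self.parent = parent
        self.origin = origin
        self.store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self.store:
            self.hits += 1
            return self.store[key]
        self.misses += 1
        if self.parent is not None:
            data = self.parent.get(key)  # ask the next tier up
        elif self.origin is not None:
            data = self.origin(key)  # last resort: the origin server
        else:
            raise KeyError(key)
        self.store[key] = data  # keep a copy for later requests
        return data


def demo():
    origin_calls = []

    def fetch_from_origin(key: str) -> bytes:
        # Hypothetical stand-in for an HTTP request to the origin.
        origin_calls.append(key)
        return f"bytes-of-{key}".encode()

    regional = CacheTier("regional-dc", origin=fetch_from_origin)
    edge_a = CacheTier("edge-a", parent=regional)
    edge_b = CacheTier("edge-b", parent=regional)

    edge_a.get("seg-000001")  # miss at edge and regional: one origin fetch
    edge_a.get("seg-000001")  # edge hit
    edge_b.get("seg-000001")  # edge miss, regional hit: no origin fetch
    return origin_calls, edge_a, edge_b, regional


if __name__ == "__main__":
    calls, edge_a, edge_b, regional = demo()
    print(len(calls), edge_a.hits, edge_b.misses, regional.hits)
```

In this sketch the origin is contacted once, no matter how many edges or players ask for the same segment afterwards. That is the property that lets a tiered cache absorb a traffic spike.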

This way, about 90% of requests are served by the CDN servers, which takes most of the load off the origin servers.
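As a rough back-of-the-envelope check, the sketch below estimates how many requests per second still travel past the CDN. It assumes each viewer requests one segment per segment duration (one second here) and takes the 90% figure at face value. The function name and the 99% comparison value are illustrative assumptions, not measurements.

```python
def requests_past_cdn(viewers: int, segment_seconds: int, cdn_hit_percent: int) -> int:
    """Requests per second that the CDN cannot serve from its own cache."""
    total_per_second = viewers // segment_seconds
    return total_per_second * (100 - cdn_hit_percent) // 100


if __name__ == "__main__":
    viewers = 20_000_000
    print(requests_past_cdn(viewers, 1, 90))  # 2000000
    print(requests_past_cdn(viewers, 1, 99))  # 200000
```

Under these assumptions, each extra point of cache hit ratio takes a further 200,000 requests per second off the data centers behind the CDN.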
