If you’re curious about how to build an intelligent agent using Model Context Protocol (MCP), you’re in the right place.

In this post, I’ll walk you through how to:

Create an MCP Server using FastMCP

Expose a tool that calculates BMI

Build a Client that communicates with this server via stdio

Use OpenAI’s GPT model to decide which tool to call and how to call it

Let’s break this down line by line — code and concept.

What is MCP?

Before diving into code, let’s understand what MCP is.

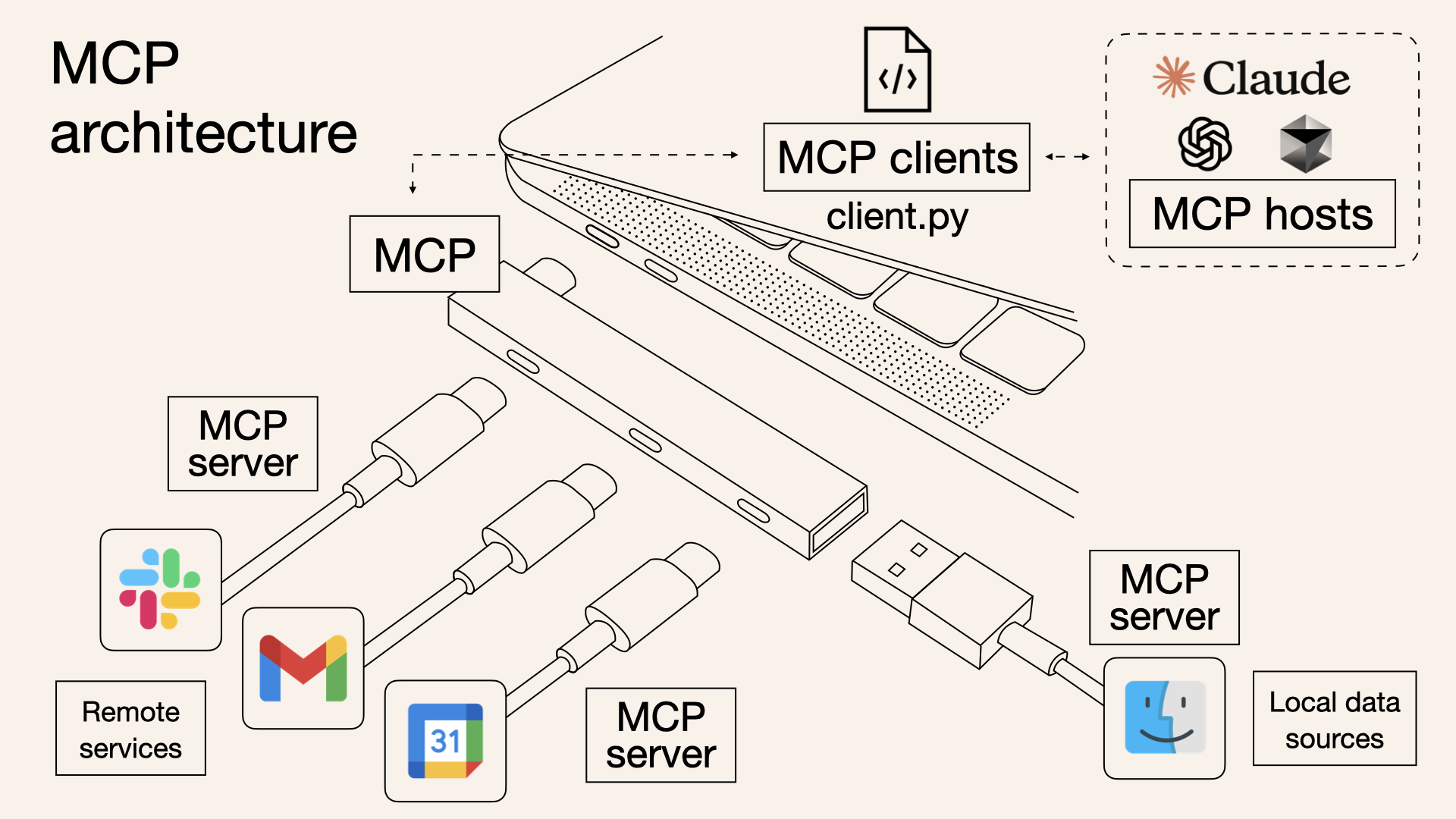

MCP (Model Context Protocol) is an open protocol developed by Anthropic to standardize how LLMs interact with tools. Think of it as the USB-C of AI apps — a universal way to connect and interact with tools, APIs, and services without writing tons of custom glue code.

Here’s a simple analogy:

USB-C Port: One port to rule them all — display, power, storage.

MCP: One protocol to access tools — calculators, search, databases, or any custom service.

By adopting MCP, we avoid the pain of writing one-off integration code for every LLM interaction. Instead, we define tools once and let the LLM figure out how to use them.

🛠️ Project Overview

We’ll build two things:

An MCP Server that exposes a simple tool to calculate BMI.

An MCP Client that uses an LLM (like OpenAI GPT) to pick a tool and its arguments, then invokes that tool on the server over stdio.

Let’s get started.

MCP Server

There are just two dependencies we need to install:

    pip install "mcp[cli]"
    pip install openai

Let’s create a file called bmi_server.py.

    import sys

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("BMI Server")

    # Log to stderr: with the stdio transport, stdout carries the protocol messages.
    print(f"Starting server {mcp.name}", file=sys.stderr)


    @mcp.tool()
    def calculate_bmi(weight_kg: float, height_m: float) -> float:
        """
        Calculate BMI given weight in kg and height in meters.
        """
        if height_m <= 0:
            raise ValueError("Height must be greater than zero.")
        return weight_kg / (height_m ** 2)


    if __name__ == "__main__":
        # Start the server only when this file is executed directly.
        mcp.run(transport="stdio")

What’s happening here?

We use the FastMCP class to create the MCP server.

We create a BMI tool using the @mcp.tool() decorator. It takes weight and height and returns BMI.

The server exposes this tool over the standard input/output (stdio) transport.

The if __name__ == "__main__" guard means the server starts only when the file is run directly, not when it is imported as a module.

This is a very basic MCP server, but it is enough to get us started on building our own MCP client.
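As a quick sanity check of the formula the tool implements, the sketch below reproduces the calculation in plain Python, without the MCP decorator. The helper feet_inches_to_m is hypothetical and added only for illustration: the tool expects height in meters, so a height given in feet and inches has to be converted first (1 inch = 0.0254 m).

```python
def feet_inches_to_m(feet: float, inches: float) -> float:
    """Hypothetical helper (not part of the server): convert ft/in to meters."""
    return (feet * 12 + inches) * 0.0254


def calculate_bmi(weight_kg: float, height_m: float) -> float:
    """Same formula as the server tool: weight divided by height squared."""
    if height_m <= 0:
        raise ValueError("Height must be greater than zero.")
    return weight_kg / (height_m ** 2)


height_m = feet_inches_to_m(5, 10)                 # 70 in * 0.0254 = 1.778 m
bmi = calculate_bmi(80, height_m)
print(f"height={height_m:.3f} m, BMI={bmi:.1f}")   # height=1.778 m, BMI=25.3
```

In the full flow it is the LLM, not the server, that is expected to do this unit conversion before it fills in the height_m argument.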

MCP Client

Now, let’s create the client in a file named bmi_client.py.

Import all the dependencies. The key ones are ClientSession, StdioServerParameters, and stdio_client from the mcp package, plus the OpenAI class to communicate with the LLM.

    import asyncio
    import json
    import os

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client
    from openai import OpenAI

We need to now establish a way to communicate with the server we just wrote. Let’s use the StdioServerParameters class from the mcp package to do that.

    server_params = StdioServerParameters(command="python", args=["bmi_server.py"])

Essentially, we are telling the client which command to run (python) and which file to pass to it (bmi_server.py) in order to start the server.
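To make the stdio idea concrete, here is a minimal sketch of what "talking to a child process over stdin/stdout" means, using only the standard library. It is not the MCP SDK: the child is a hypothetical stand-in that echoes one JSON message back, and the message fields are illustrative rather than a full MCP message. In the real client, stdio_client takes care of starting the process and exchanging JSON-RPC messages over these streams.

```python
import json
import subprocess
import sys

# Hypothetical stand-in for a server: read one JSON line, reply with one JSON line.
CHILD_CODE = (
    "import sys, json\n"
    "request = json.loads(sys.stdin.readline())\n"
    "reply = {'id': request['id'], 'result': 'pong'}\n"
    "print(json.dumps(reply), flush=True)\n"
)


def ping_child() -> dict:
    """Start the child, send one newline-delimited JSON message, read the reply."""
    proc = subprocess.Popen(
        [sys.executable, "-c", CHILD_CODE],   # same idea as command="python", args=[...]
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    request = {"id": 1, "method": "ping"}     # illustrative fields only
    out, _ = proc.communicate(json.dumps(request) + "\n", timeout=10)
    return json.loads(out)


print(ping_child())
```

Using sys.executable instead of the bare string "python" is one way to make sure the child runs under the same interpreter (and virtual environment) as the client; whether that matters depends on your setup.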

Let’s write a generic function to communicate with an LLM API. It simply takes a prompt and returns a response.

    def llm_client(message: str) -> str:
        """
        Send a message to the LLM and return the response.
        """
        # Initialize the OpenAI client
        openai_client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

        # Send the message to the LLM
        response = openai_client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[
                # One dict per message; repeated keys in a single dict would keep only the last values.
                {"role": "system",
                 "content": "You are an intelligent assistant. You will execute tasks as prompted"},
                {"role": "user", "content": message},
            ],
            max_tokens=250,
            temperature=0.2,
        )

        # Extract and return the response content
        return response.choices[0].message.content.strip()
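The messages argument is a list with one dict per message, each carrying its own role and content. The sketch below, using a hypothetical build_messages helper, shows the expected shape and why the system and user messages must not share one dict: Python keeps only the last value for a repeated key in a dict literal.

```python
def build_messages(system_prompt: str, user_message: str) -> list[dict]:
    """Hypothetical helper: one dict per chat message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


messages = build_messages("You are an intelligent assistant.", "Hello")
print(len(messages))          # 2
print(messages[0]["role"])    # system

# A single dict literal with repeated keys silently keeps the last value of each,
# so the system prompt would be lost.
merged = {"role": "system", "content": "You are an intelligent assistant.",
          "role": "user", "content": "Hello"}
print(merged)                 # {'role': 'user', 'content': 'Hello'}
```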

We need to write a prompt that does two things:

1. Shares context with the LLM about the tools it has at its disposal

2. Asks for structured output so that we can execute the tool the LLM selected

    def get_prompt_to_identify_tool_and_arguments(query, tools):
        # One line per tool: name, description, and JSON schema of its inputs
        tools_description = "\n".join(
            f"- {tool.name}, {tool.description}, {tool.inputSchema}" for tool in tools
        )
        return ("You are a helpful assistant with access to these tools:\n\n"
                f"{tools_description}\n"
                "Choose the appropriate tool based on the user's question. \n"
                f"User's Question: {query}\n"
                "If no tool is needed, reply directly.\n\n"
                "IMPORTANT: When you need to use a tool, you must ONLY respond with "
                "the exact JSON object format below, nothing else:\n"
                "Keep the values in str\n"
                "{\n"
                '    "tool": "tool-name",\n'
                '    "arguments": {\n'
                '        "argument-name": "value"\n'
                "    }\n"
                "}\n\n")

We are passing two arguments: the original query from the user and the list of tools from the server(s). Let’s now see how to get the list of tools from the server.

    async def run(query: str):
        # Launch the server as a subprocess and connect to its stdio streams
        async with stdio_client(server_params) as (read, write):
            async with ClientSession(read, write) as session:
                await session.initialize()

                # Get the list of available tools
                tools = await session.list_tools()
                print(f"Available tools: {tools}")

                # Ask the LLM which tool to call and with what arguments
                prompt = get_prompt_to_identify_tool_and_arguments(query, tools.tools)
                llm_response = llm_client(prompt)
                print(f"LLM Response: {llm_response}")

                # Parse the JSON reply and invoke the selected tool
                tool_call = json.loads(llm_response)
                result = await session.call_tool(tool_call["tool"], arguments=tool_call["arguments"])

                # Pull the values out first: nested double quotes in an f-string need Python 3.12+
                args = tool_call["arguments"]
                bmi = result.content[0].text
                print(f"BMI for weight {args['weight_kg']}kg and height {args['height_m']}m is {bmi}")
                return bmi

Key things happening here:

We start the BMI server process using stdio_client and get access to its read and write streams.

Next, we create a session and initialize it. This sets up the communication between our client and the server.

We then ask the server for a list of all available tools. In our case, it will return the calculate_bmi function we exposed earlier.

We prepare a detailed prompt using the earlier function, passing in the user's query and the available tools. This prompt helps the language model figure out which tool to call and what arguments to pass.

We parse the JSON response, extract the tool name and arguments, and then call the tool using session.call_tool.

Finally, we return the output from the tool call: in this case, the calculated BMI.
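One fragile spot in this flow is json.loads(llm_response): the prompt allows the model to reply directly when no tool is needed, and models sometimes wrap JSON in Markdown code fences. Below is a minimal sketch of a more forgiving parser. The function name parse_tool_call and the fence-stripping rule are assumptions made for illustration; they are not part of the MCP SDK.

```python
import json
from typing import Optional

FENCE = "`" * 3  # three backticks, as used for Markdown code fences


def parse_tool_call(llm_response: str) -> Optional[dict]:
    """Return {"tool": ..., "arguments": {...}}, or None if the reply is not a tool call."""
    text = llm_response.strip()
    # Assumption: the model may wrap the JSON in a fenced block tagged "json".
    if text.startswith(FENCE):
        text = text.strip("`").strip()
        if text.startswith("json"):
            text = text[len("json"):].strip()
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None  # plain-text answer, no tool requested
    if not isinstance(data, dict) or "tool" not in data:
        return None
    if not isinstance(data.get("arguments"), dict):
        return None
    return data


print(parse_tool_call('{"tool": "calculate_bmi", "arguments": {"weight_kg": "80", "height_m": "1.778"}}'))
print(parse_tool_call("BMI is weight divided by height squared."))  # None
```

In run(), a None result could be used to print the model's reply as-is instead of calling session.call_tool.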

To make all of the above work, we will add an entry point. When this script is run, it sets the user query — here we ask to calculate BMI — and runs the run() function using asyncio. The final result is printed on screen!

    if __name__ == "__main__":
        query = "Calculate BMI for height 5ft 10inches and weight 80kg"
        print(f"Sending query: {query}")
        result = asyncio.run(run(query))
        print(f"Result: {result}")
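Note that asyncio.run returns whatever the coroutine returns, so the printed result is only meaningful if run() ends with a return statement. A tiny stand-alone sketch, with hypothetical coroutines standing in for the real client:

```python
import asyncio


async def fake_run(query: str) -> str:
    """Hypothetical stand-in for run(): pretend to call a tool and return its text."""
    await asyncio.sleep(0)           # placeholder for the real awaitable work
    return f"handled: {query}"


async def forgetful_run(query: str) -> None:
    """Same work, but without a return statement."""
    await asyncio.sleep(0)


print(asyncio.run(fake_run("bmi")))        # handled: bmi
print(asyncio.run(forgetful_run("bmi")))   # None
```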

And that’s how you build an intelligent MCP client that can call tools dynamically using OpenAI and a BMI server. The magic lies in combining tool discovery, LLM prompting, and tool invocation — all within a simple and elegant flow.